Yann Neuhaus

Using local images with nerdctl build?

Following the issue I talked about in my last blog, I started building local images using “nerdctl” for OpenText Documentum 23.4. That worked properly for the first one, which uses the Red Hat base to install some common OS packages used by all components/containers of Documentum. However, when I tried to build the next one, based on it, to start installing an Oracle Client specifically for the Documentum Content Server container, it failed with this error:

Mac:ora-client$ ./build.sh

************************************************

*** Building the image 'ora-client:19.3.0.0' ***

************************************************

[+] Building 2.2s (3/3) FINISHED

=> [internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 1.42kB 0.0s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> ERROR [internal] load metadata for registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901 2.1s

------

> [internal] load metadata for registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901:

------

Dockerfile:12

--------------------

10 |

11 | ARG ARG_BASE_IMAGE

12 | >>> FROM $ARG_BASE_IMAGE

13 |

14 | LABEL maintainer="dbi ECM & Application Integration <dbi_eai@dbi-services.com>"

--------------------

error: failed to solve: registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901: failed to do request: Head "https://registry-sbx.it.dbi-services.com/v2/dbi_dctm/linux-ot/manifests/8.10-901": x509: certificate is valid for ingress.local, not registry-sbx.it.dbi-services.com

FATA[0002] no image was built

Error: exit status 1

FATA[0000] failed to create a tmp single-platform image "registry-sbx.it.dbi-services.com/dbi_dctm/ora-client:19.3.0.0-tmp-reduced-platform": image "registry-sbx.it.dbi-services.com/dbi_dctm/ora-client:19.3.0.0": not found

Error: exit status 1

***********************************************************

*** Script completed for 'dbi_dctm/ora-client:19.3.0.0' ***

***********************************************************

Mac:ora-client$

The above “nerdctl build” command fails on the “FROM $ARG_BASE_IMAGE” line, where the base image is obviously the one I had just built a few minutes before. The error is the same as in the previous blog, related to the Self-Signed SSL Certificate of the private registry. Maybe adding the “insecure-registry” parameter to the “nerdctl build” command could help work around the security checks (Note: I tested it for the sake of completeness, but it’s not possible). However, I didn’t do that in my previous blog because, for me, it should only be required if you really/absolutely need to talk to the private registry… Since I had just built the base image locally, why the hell would it need to be re-downloaded? That doesn’t make a lot of sense…

Just to confirm, I checked the local images to make sure the base image was tagged properly and available:

Mac:ora-client$ nerdctl images

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot 8.10-901 9b9b6662b7a7 9 minutes ago linux/amd64 1.2 GiB 347.6 MiB

Mac:ora-client$

So, it is indeed there. I searched Google again and found several people saying that “nerdctl” isn’t able to use local images at all… However, I also saw some people talking about a “buildkit” namespace, and I remembered seeing the namespace set to “default” in my system information:

Mac:ora-client$ nerdctl system info

Client:

Namespace: default

Debug Mode: false

Server:

...

Mac:ora-client$

Mac:ora-client$ nerdctl version

Client:

Version: v1.7.3

OS/Arch: linux/amd64

Git commit: 0a464409d0178e16d3d2bed36222937ec3fc9c77

buildctl:

Version: v0.12.5

GitCommit: bac3f2b673f3f9d33e79046008e7a38e856b3dc6

Server:

containerd:

Version: v1.7.10

GitCommit: 4e1fe7492b9df85914c389d1f15a3ceedbb280ac

runc:

Version: 1.1.10

GitCommit: 18a0cb0f32bcac2ecc9a10f327d282759c144dab

Mac:ora-client$

Therefore, I thought I could list the images while specifying each namespace, to see what the outcome would be. Since I had no idea which namespaces were present, I looked at the help of “nerdctl” and saw only “moby”, “k8s.io” and “default” mentioned. There was no mention of “buildkit”, but I still tried it anyway to see if it would throw an error:

Mac:ora-client$ nerdctl --help | grep -i namespace

namespace Manage containerd namespaces

-n, --n string Alias of --namespace (default "default")

--namespace string containerd namespace, such as "moby" for Docker, "k8s.io" for Kubernetes [$CONTAINERD_NAMESPACE] (default "default")

Mac:ora-client$

Mac:ora-client$ nerdctl --namespace moby image ls

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

Mac:ora-client$

Mac:ora-client$ nerdctl --namespace k8s.io image ls

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

Mac:ora-client$

Mac:ora-client$ nerdctl --namespace default images

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot 8.10-901 9b9b6662b7a7 18 minutes ago linux/amd64 1.2 GiB 347.6 MiB

Mac:ora-client$

Mac:ora-client$ nerdctl --namespace buildkit image ls

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

Mac:ora-client$
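Checking the namespaces one by one like this can be scripted. The following is a minimal sketch, assuming the table layout printed by “nerdctl images” above (REPOSITORY in column 1, TAG in column 2); “find_image_namespaces” is a hypothetical helper name, not a nerdctl feature, and it only relies on the “--namespace” flag and the “images” command already used here.

```bash
#!/bin/bash
# Hypothetical helper (not part of nerdctl): print each namespace, among the
# ones given as arguments, whose "nerdctl images" listing contains the image.
# Assumes the default table layout: REPOSITORY in column 1, TAG in column 2.
find_image_namespaces() {
  local image="$1"
  shift
  local repo="${image%:*}"   # everything before the last ":"
  local tag="${image##*:}"   # everything after the last ":"
  local ns
  for ns in "$@"; do
    # Skip the header line, then look for an exact repository/tag match.
    if nerdctl --namespace "${ns}" images \
        | awk -v r="${repo}" -v t="${tag}" \
            'NR > 1 && $1 == r && $2 == t { found = 1 } END { exit (found ? 0 : 1) }'; then
      echo "${ns}"
    fi
  done
}
```

For example, “find_image_namespaces registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901 moby k8s.io default buildkit” should print only “default” in the situation shown above.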

What I got from that output is that the image is currently only visible in the “default” namespace, I assume because I didn’t specify any namespace in the build command. What would happen if I put that image into the “buildkit” namespace instead? I saw in the Rancher Desktop documentation that it is possible to move an image to another environment or container engine, so I tried to do that between namespaces:

Mac:ora-client$ nerdctl --namespace default save -o image.tar registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901

Mac:ora-client$

Mac:ora-client$ nerdctl --namespace buildkit image ls

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

Mac:ora-client$

Mac:ora-client$ nerdctl --namespace buildkit load -i image.tar

unpacking registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901 (sha256:9b9b6662b7a790c39882f8b4fd22e2b85bd4c419b6f6ffd350e447c35b2650f7)...

Loaded image: registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901

Mac:ora-client$

Mac:ora-client$ nerdctl --namespace buildkit image ls

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot 8.10-901 9b9b6662b7a7 21 seconds ago linux/amd64 1.2 GiB 347.6 MiB

Mac:ora-client$

Mac:ora-client$ rm image.tar

Mac:ora-client$
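The save/load round trip above can be wrapped in a small function so that the temporary archive is always cleaned up. This is a sketch built only on the “nerdctl save -o” and “nerdctl load -i” commands used above; the function name “copy_image_between_namespaces” is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: copy an image from one containerd namespace to another
# with the same "nerdctl save" / "nerdctl load" pair, through a temporary tarball.
copy_image_between_namespaces() {
  local src_ns="$1" dst_ns="$2" image="$3"
  local archive rc
  archive="$(mktemp)" || return 1
  # Export from the source namespace, then import into the destination one.
  nerdctl --namespace "${src_ns}" save -o "${archive}" "${image}" \
    && nerdctl --namespace "${dst_ns}" load -i "${archive}"
  rc=$?
  rm -f "${archive}"   # always remove the temporary tarball, even on failure
  return "${rc}"
}
```

With the values from this blog, the call would be “copy_image_between_namespaces default buildkit registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901”.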

Now that the image is showing up on the “buildkit” namespace, would the build work? I simply re-executed the exact same command as before and this time it was successful:

[+] Building 149.2s (8/8) FINISHED

=> [internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 1.42kB 0.0s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901 4.2s

=> [internal] load build context 0.4s

=> => transferring context: 21.51kB 0.4s

=> [1/3] FROM registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901@sha256:9b9b6662b7a790c39882f8b4fd2 4.2s

=> => resolve registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901@sha256:9b9b6662b7a790c39882f8b4fd2 4.2s

...

=> => exporting layers 0.3s

=> => exporting manifest sha256:d6fc394f3c144735900f521d3dc603ef1b890e3460d4b94d226f9395de7ad1f3 0.1s

=> => exporting config sha256:67a60b84eacc425311471cd18fb538419e71cb1e1f4245fa21ad2215b93939f4 0.0s

=> => sending tarball 28.5s

However, the newly created image showed up in the “default” namespace. Therefore, I added the command line parameter “--namespace buildkit” to both my “nerdctl build” and “nerdctl push” commands, so that the images are created in the “buildkit” namespace and pushed from there into the private registry. That way, the build can use the local images and doesn’t download anything. The updated and final commands for this second image build look like this:

nerdctl --namespace buildkit build --no-cache --rm \

--tag="${REGISTRY}/${OWNER}/${NAME}" \

--build-arg ARG_BASE_IMAGE="${REGISTRY}/${OWNER}/${BASE_IMAGE}" \

--build-arg ARG_ARTIFACTORY_URL="${ARTIFACTORY_URL}" \

--build-arg ARG_ORACLE_VERSION="${ORACLE_VERSION}" \

--build-arg ARG_ORACLE_VERSION_DIR="${ORACLE_VERSION_DIR}" \

--build-arg ARG_ORACLE_PACKAGE_1="${ORACLE_PACKAGE_1}" \

--build-arg ARG_ORACLE_PACKAGE_2="${ORACLE_PACKAGE_2}" \

--build-arg ARG_ORACLE_BASE="${ORACLE_BASE}" \

--build-arg ARG_APP_OWNER="${APP_OWNER}" \

--build-arg ARG_DATA_BASE="${DATA_BASE}" .

echo

nerdctl --namespace buildkit push --insecure-registry ${REGISTRY}/${OWNER}/${NAME}
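Since the “nerdctl --help” output above shows that “--namespace” can also be set through the “CONTAINERD_NAMESPACE” environment variable, an alternative to repeating the flag is to set that variable for the build and push calls. The sketch below assumes that documented behavior; “build_and_push” is a hypothetical wrapper name, and the “--build-arg” list of the real script is passed through as extra arguments.

```bash
#!/bin/bash
# Sketch: run build and push with CONTAINERD_NAMESPACE=buildkit instead of
# repeating "--namespace buildkit" on each nerdctl command.
# build_and_push is hypothetical; extra arguments go to "nerdctl build".
build_and_push() {
  local image="$1"
  shift
  CONTAINERD_NAMESPACE=buildkit nerdctl build --no-cache --rm --tag="${image}" "$@" . || return 1
  CONTAINERD_NAMESPACE=buildkit nerdctl push --insecure-registry "${image}"
}
```

Exporting “CONTAINERD_NAMESPACE=buildkit” once at the top of the build script would have the same effect for every “nerdctl” call in it.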

Just to make sure everything worked, I removed all local images and re-built the 1st and 2nd images from scratch. It behaved as expected, putting new images into the “buildkit” namespace and using them properly, without pulling anything from the private registry.

In conclusion, is it possible to make “nerdctl” use local images? I would say yes… But you have to specifically build your images into the “buildkit” namespace and not into the “default” one, otherwise it will not work. Why? No idea :D… As an additional note, this worked on my Mac using Rancher Desktop (with both an old (1.9) and a recent version (1.13)) but when I tried it on a standard Linux VM without Rancher Desktop (just pure containerd/nerdctl), it didn’t work: it kept trying to pull images from the registry, no matter what. Therefore, on the Linux VM, I switched to “podman” (yet another one… :D).

The article Using local images with nerdctl build? first appeared on dbi Blog.

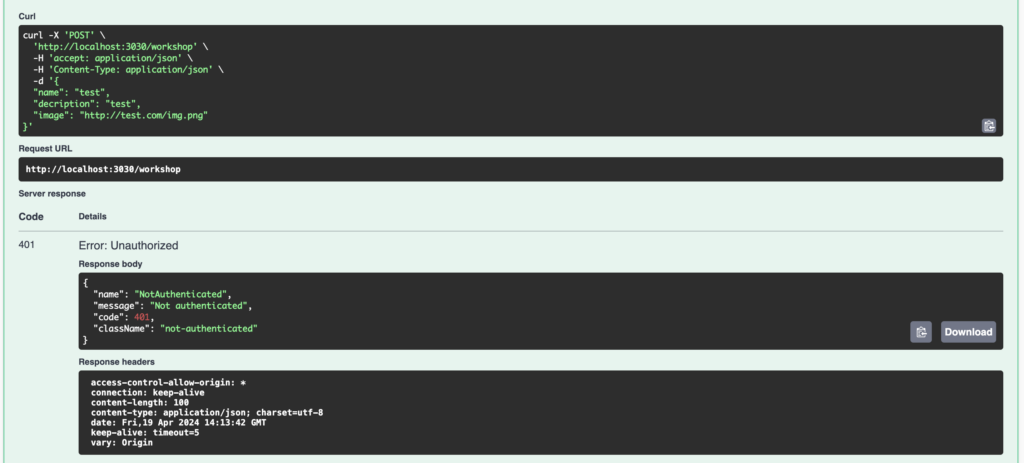

Push images to a Self-Signed Cert registry with Rancher Desktop

I recently had to work on creating some custom images for OpenText Documentum 23.4 that will run on Kubernetes, but I faced some trouble while trying to push images with “dockerd” and “nerdctl”. Before I start talking about that, I clearly don’t consider myself a Docker expert; of course I worked with it a bit in the past years, but that’s pretty much it. Regarding my setup, I’m on a Mac with Rancher Desktop, which I use for its embedded container engine, which is (and was for me) “dockerd” (moby) by default.

OpenText has provided images for their Documentum software for a few years already (since 2019?), but at the beginning they weren’t very usable (difficult to customize, lack of security controls, missing components, etc.). Therefore, for a few customers, we developed our own containers that use the silent installations of Documentum, and that worked pretty well, with around 80 to 100 Documentum environments at customers running on our images. If you want to know more about the silent installation, I wrote a series of blogs back in 2018; it’s pretty old but still quite accurate. Despite the evolutions and improvements of the OpenText Documentum images over the years, I still think there are a few pain points. Therefore, my goal here was to check/adapt our images for the recent version Documentum 23.4. A colleague from our DevOps team set up an internal Kubernetes environment with a private registry for me to start working on the build of the images.

For quick development/testing, I created a small shell script that simply triggers a build and a push of the image to the registry. The first image I needed to create is a base OS image that includes all the OS packages required to install and run a Documentum environment (a common base image with some packages used by all containers of all Documentum components). I used Red Hat as the underlying OS, as this is the one mainly used by our customers for support and compatibility reasons. The shell script is rather simple:

#!/bin/bash

# Build Arguments

export NAME="linux-ot:8.10-901"

export OWNER="dbi_dctm"

export REGISTRY="registry-sbx.it.dbi-services.com"

export BASE_IMAGE="registry.access.redhat.com/ubi8:8.10-901.1716482497"

export ENV="DEV"

# Build the image

echo "**********************************************"

echo "*** Building the image '${NAME}' ***"

echo "**********************************************"

echo

docker build --no-cache --rm=true --force-rm=true --squash \

--tag="${REGISTRY}/${OWNER}/${NAME}" \

--build-arg ARG_BASE_IMAGE="${BASE_IMAGE}" \

--build-arg ARG_ENV="${ENV}" .

echo

docker push ${REGISTRY}/${OWNER}/${NAME}

echo

echo "*********************************************************"

echo "*** Script completed for '${OWNER}/${NAME}' ***"

echo "*********************************************************"

The build part was a success but, unfortunately for me, the push failed because the Private Registry was set up on port 443 with a Self-Signed SSL Certificate… Therefore, I started looking all over Google for ways to configure Docker properly to allow that, but without much success. I found a few resources, such as this one from Rancher directly, which is supposed to trust the Self-Signed SSL Certificate, or this one to allow some registries, or a few other ones suggesting to add “insecure-registries” to the Docker configuration. I tried them all, but none was sufficient. First of all, I tried to trust the Self-Signed SSL Certificate as indicated in the Rancher documentation:

Mac:linux-ot$ DOMAIN=registry-sbx.it.dbi-services.com

Mac:linux-ot$ PORT=443

Mac:linux-ot$

Mac:linux-ot$ cat /etc/default/docker

DOCKER_OPTS="$DOCKER_OPTS --insecure-registry=registry-sbx.it.dbi-services.com"

Mac:linux-ot$

Mac:linux-ot$ openssl s_client -showcerts -connect ${DOMAIN}:${PORT} < /dev/null 2> /dev/null | openssl x509 -outform PEM > ca.crt

Mac:linux-ot$

Mac:linux-ot$ sudo mkdir -p /etc/docker/certs.d/${DOMAIN}/

Mac:linux-ot$ sudo cp ca.crt /etc/docker/certs.d/${DOMAIN}/ca.crt

Mac:linux-ot$

Mac:linux-ot$ cat ca.crt | sudo tee -a /etc/ssl/certs/ca-certificates.crt

-----BEGIN CERTIFICATE-----

MIIDcDCCAligAwIBAgIRANOLAjECYd1TSCjCfF8uIcwwDQYJKoZIhvcNAQELBQAw

SzEQMA4GA1UEChMHQWNtZSBDbzE3MDUGA1UEAxMuS3ViZXJuZXRlcyBJbmdyZXNz

...

Z4As4XDW01De9zLd8t1RWriA3aBLtXIDcXSYgm6O1L6v2VyjzxSZiuIBiv9HlPQ5

/CrWUd8znYbe5Ur6v3kKv29yzc4=

-----END CERTIFICATE-----

Mac:linux-ot$
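Before trusting a certificate, it can help to check which names it is really issued for, since the push error in this case is about a name mismatch (“ingress.local” vs “registry-sbx.it.dbi-services.com”). A minimal sketch using the standard “openssl x509” command on the “ca.crt” file retrieved above; “show_cert_names” is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: show which names a PEM certificate is valid for,
# i.e. its subject, then each DNS entry of its Subject Alternative Name.
show_cert_names() {
  local pem="$1"
  openssl x509 -in "${pem}" -noout -subject
  # "-text" prints the SAN extension as "DNS:name1, DNS:name2"; keep only the names.
  openssl x509 -in "${pem}" -noout -text \
    | grep -o 'DNS:[^,]*' \
    | sed 's/^DNS://'
}
```

Given the x509 error in this blog, “show_cert_names ca.crt” would presumably list “ingress.local” and not “registry-sbx.it.dbi-services.com”, which would also explain why trusting the certificate alone isn’t enough: the hostname still doesn’t match.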

I also tried adding “insecure-registries” to the Docker daemon.json file, which didn’t exist before:

Mac:linux-ot$ cat /etc/docker/daemon.json

{

"insecure-registries" : ["registry-sbx.it.dbi-services.com"]

}

Mac:linux-ot$

But despite all that, after restarting Rancher Desktop, pushing the images still didn’t work. I also saw some references about needing to use “http://” in the Docker daemon.json “insecure-registries” configuration, even for https registries, but still no luck. The error was that the SSL Certificate received was valid for “ingress.local” but the registry used was “registry-sbx.it.dbi-services.com”, and of course the same output was given even when I executed the “docker push” command manually (outside of the script):

Mac:linux-ot$ ./build.sh

**********************************************

*** Building the image 'linux-ot:8.10-901' ***

**********************************************

WARNING: experimental flag squash is removed with BuildKit. You should squash inside build using a multi-stage Dockerfile for efficiency.

[+] Building 489.8s (10/10) FINISHED docker:rancher-desktop

=> [internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 3.12kB 0.0s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for registry.access.redhat.com/ubi8:8.10-901.1716482497 3.3s

...

=> exporting to image 22.5s

=> => exporting layers 22.4s

=> => writing image sha256:03b090f94723c8947126cd7bfbc9a152612de44baab58417f85e7d1d2e46a5fa 0.0s

=> => naming to registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901 0.0s

The push refers to repository [registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot]

Get "https://registry-sbx.it.dbi-services.com/v2/": tls: failed to verify certificate: x509: certificate is valid for ingress.local, not registry-sbx.it.dbi-services.com

*********************************************************

*** Script completed for 'dbi_dctm/linux-ot:8.10-901' ***

*********************************************************

Mac:linux-ot$

Mac:linux-ot$ docker push registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901

The push refers to repository [registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot]

Get "https://registry-sbx.it.dbi-services.com/v2/": tls: failed to verify certificate: x509: certificate is valid for ingress.local, not registry-sbx.it.dbi-services.com

Mac:linux-ot$

At that time, nobody could change/fix the certificate used by the registry, but I knew some of my colleagues used “nerdctl” instead of “docker”, so I decided to try it:

Mac:linux-ot$ nerdctl images

FATA[0000] cannot access containerd socket "/run/k3s/containerd/containerd.sock" (hint: try running with `--address /var/run/docker/containerd/containerd.sock` to connect to Docker-managed containerd): no such file or directory

Error: exit status 1

Mac:linux-ot$

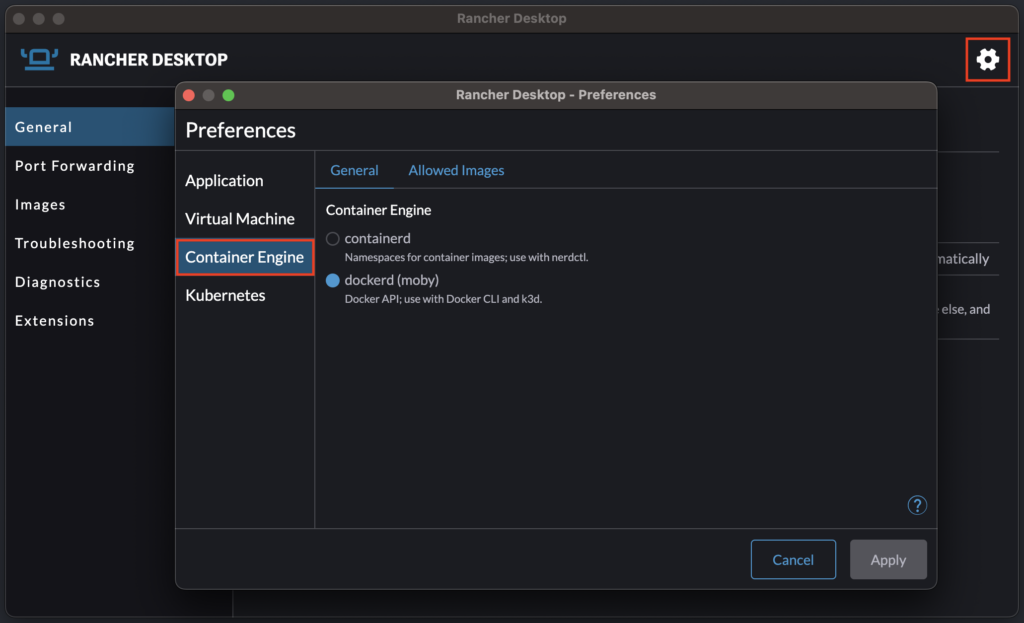

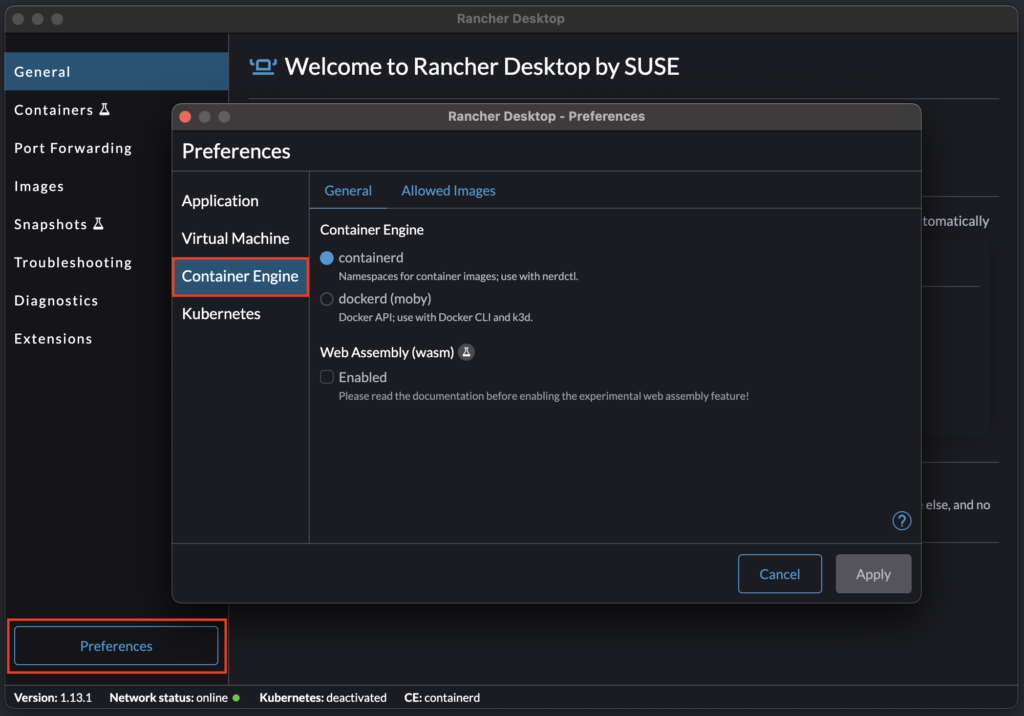

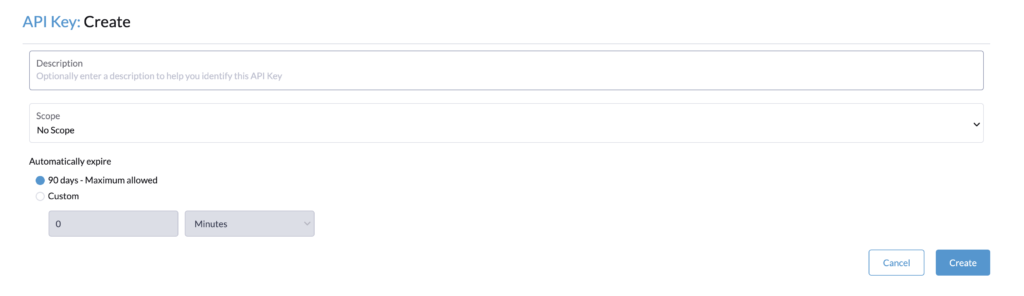

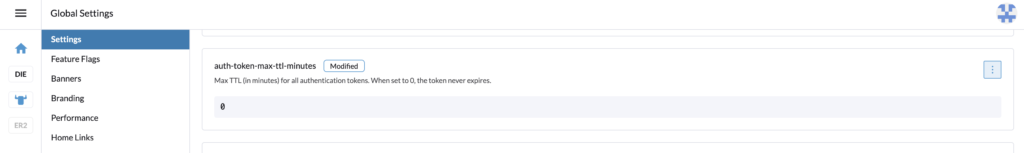

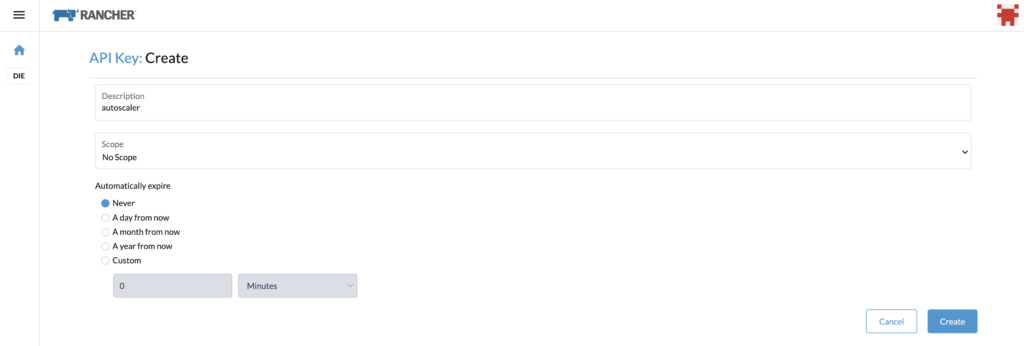

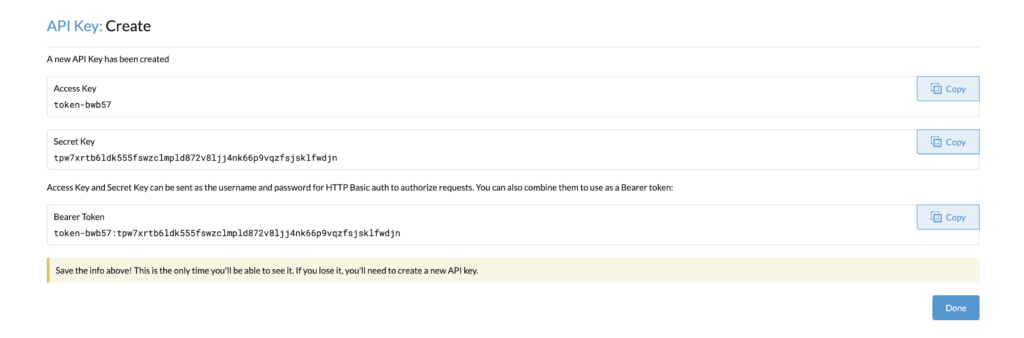

While looking into the options of Rancher Desktop, I saw it was possible to choose between two container engines. For that purpose, in the main window, click on the Gear on the top right corner (old versions of Rancher Desktop) or on the Preferences button on the bottom left corner (new versions of Rancher Desktop) and select Container Engine (or Rancher Desktop menu icon > Open preferences dialog > Container Engine). Here are screenshots of an old version of Rancher Desktop first, and then a newer/recent version of Rancher Desktop:

As you can see in the error above, “nerdctl” requires “containerd”. Therefore, I changed my container engine from “dockerd” to “containerd” and restarted Rancher Desktop. I had to change the build script a little, since apparently not all command line parameters are available in “nerdctl”, and I also took this opportunity to include “insecure-registry” in the command line, as this is possible with “nerdctl” (not with “dockerd”). My updated script looked like this:

...

nerdctl build --no-cache --rm \

--tag="${REGISTRY}/${OWNER}/${NAME}" \

--build-arg ARG_BASE_IMAGE="${BASE_IMAGE}" \

--build-arg ARG_ENV="${ENV}" .

echo

nerdctl push --insecure-registry ${REGISTRY}/${OWNER}/${NAME}

...

This time, the build and the push of the image with nerdctl were both successful:

Mac:linux-ot$ ./build.sh

**********************************************

*** Building the image 'linux-ot:8.10-901' ***

**********************************************

[+] Building 507.0s (10/10)

[+] Building 507.2s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.2s

=> => transferring dockerfile: 3.12kB 0.1s

=> [internal] load .dockerignore 0.2s

=> => transferring context: 2B 0.1s

=> [internal] load metadata for registry.access.redhat.com/ubi8:8.10-901.1716482497 5.8s

...

=> exporting to docker image format 35.5s

=> => exporting layers 20.8s

=> => exporting manifest sha256:9b9b6662b7a790c39882f8b4fd22e2b85bd4c419b6f6ffd350e447c35b2650f7 0.0s

=> => exporting config sha256:1a4e6c6559a2d7c39a987537691f67b677640eada5ecdcdcc03ec210f7c672bf 0.0s

=> => sending tarball 14.7s

Loaded image: registry-sbx.it.dbi-services.com/dbi_dctm/linux-ot:8.10-901

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:9b9b6662b7a790c39882f8b4fd22e2b85bd4c419b6f6ffd350e447c35b2650f7)

WARN[0000] skipping verifying HTTPS certs for "registry-sbx.it.dbi-services.com"

manifest-sha256:9b9b6662b7a790c39882f8b4fd22e2b85bd4c419b6f6ffd350e447c35b2650f7: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:1a4e6c6559a2d7c39a987537691f67b677640eada5ecdcdcc03ec210f7c672bf: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 42.0s total: 17.1 K (416.0 B/s)

*********************************************************

*** Script completed for 'dbi_dctm/linux-ot:8.10-901' ***

*********************************************************

Mac:linux-ot$

So, in conclusion, there might be a way to make “dockerd” ignore a Self-Signed SSL Certificate when it’s used as the embedded container engine inside Rancher Desktop, but if there is, it’s well hidden… If you know about it, don’t hesitate to share. In any case, switching to “containerd” and “nerdctl” is a possible workaround when you just want to quickly start your development on your internal dev environment without having to worry about the certificate being used. Of course, pushing images with “nerdctl” and “--insecure-registry” means ignoring security, which is never a good idea. Therefore, use it with caution and, if you have a similar SSL Certificate issue, make sure to fix it as soon as possible so you can use the secure way.

The article Push images to a Self-Signed Cert registry with Rancher Desktop first appeared on dbi Blog.

Hogging Threads check in Apache Tomcat

In Apache Tomcat, there is a Stuck Thread Detector Valve which can be enabled pretty easily through the Tomcat “server.xml” file. I talked about that in a previous blog already and provided an AWK parser to extract important details from the logs. However, Tomcat does not have, as far as I know, any notion of Hogging Threads. That’s what I will talk about in this blog.

Hogging Threads

If we take a WebLogic Server as an example, there is a notion of both Hogging and Stuck Threads. There is a pretty short (and not really helpful) explanation of what a Hogging Thread is in Doc ID 780411.1 and another one in Doc ID 1517608.1. In summary, WebLogic has Work Managers that keep track of the requests currently being executed and of their execution time. Every two seconds, WebLogic calculates the average time it took to complete requests in the last couple of minutes. It then considers as a Hogging Thread any request that is currently taking more than 7 times that average. Therefore, if the calculated average is 10 seconds, Hogging Threads are requests that take more than 7*10 = 70 seconds. If such a request completes before 600 seconds, then that’s it. However, if it stays in execution for more than 600s, then it becomes a Stuck Thread. In short, you can consider Hogging Threads as unusually slow requests.
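The WebLogic rule described above can be expressed as a tiny decision function. This is only an illustration of the arithmetic (7 times the average for Hogging, 600 seconds for Stuck), not WebLogic code; “classify_request” is a hypothetical name and durations are whole seconds.

```bash
#!/bin/bash
# Illustration of the WebLogic-style rule (not WebLogic code): a running request
# is "hogging" once it exceeds 7x the recent average execution time, and
# "stuck" once it has run for 600 seconds or more. Durations are whole seconds.
classify_request() {
  local elapsed="$1" average="$2"
  local factor=7 stuck_after=600
  if (( elapsed >= stuck_after )); then
    echo "stuck"
  elif (( elapsed > factor * average )); then
    echo "hogging"
  else
    echo "normal"
  fi
}
```

With the 10-second average from the example, “classify_request 71 10” prints “hogging”, while “classify_request 600 10” prints “stuck”.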

Usual Tomcat monitoring

If you look at most monitoring solutions for Tomcat, they usually rely on JMX, either directly or indirectly through an Application deployed on Tomcat (or a JavaAgent). This allows extracting the existing metrics and using these Out-Of-The-Box values in Prometheus, Grafana, Zabbix, etc… However, the ThreadPool (or Executor) MBean doesn’t really provide much useful information. Tomcat only includes details about how many threads are currently busy (doing something) and the maximum number of threads possible (as defined in the “server.xml”). But there is no runtime information or state about the requests… In another blog (damn, that was 8 years ago already!), I talked about a WLST script I wrote to monitor a lot of things on WebLogic Servers, which included much more than what Tomcat gives you. The WebLogic JMX MBean is “ThreadPoolRuntimeMBean” and you can find details about all the metrics that WebLogic provides here (example for WebLogic 12c).

Simple logic to implement

If you would like to monitor Hogging Threads, you would need to build it yourself. It should be possible to develop something very close to the WebLogic Work Managers for Tomcat, but you would need Java and Tomcat knowledge to extend the HTTP Servlet or to use a custom valve. However, it would modify the behavior of Tomcat and would most probably have some performance impact as well… To stay simple and still provide something close to it, I thought about the following: since you will need to enable the Stuck Thread Detector Valve to obtain details about the Stuck Threads, you could set the threshold of this valve to 70/100/120s, for example. Then you could consider all detected threads as Hogging from the moment they appear in the Tomcat logs until they complete OR until they become Stuck Threads (600s if we keep it aligned with WebLogic).

WebLogic only considers user requests to calculate the average and ignores anything related to internal processing. On Tomcat, you could simulate something close to that by looking at the access log and taking the values from ‘%T’ (time taken to process the request, in seconds) or ‘%F’ (time taken to commit the response, in milliseconds), cf. the documentation. For example, take all lines from the last 2 minutes, calculate the average time taken by all these requests, and multiply it by 7. That would give you a behavior close enough to WebLogic’s. But how do you find Threads running for longer than that (knowing that this average changes every time it gets calculated!) without extending Tomcat? The access log only contains completed requests, not on-going ones… From my point of view, to do that, you would need to extend the HTTP Servlet or use a custom valve anyway.
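The average-based threshold itself could be computed from the access log with a few lines of AWK. This sketch assumes a hypothetical access log pattern in which the first field is the request time as epoch seconds and the last field is ‘%T’; adapt the field positions to your real pattern. “hogging_threshold” is a hypothetical name; it takes the current epoch time, the window (120 seconds by default) and the factor (7 by default).

```bash
#!/bin/bash
# Hypothetical helper: read access log lines on stdin and print
# factor * average(%T) over the requests of the last "window" seconds.
# Assumed log layout: field 1 = epoch seconds, last field = %T (seconds).
hogging_threshold() {
  local now="$1" window="${2:-120}" factor="${3:-7}"
  awk -v now="${now}" -v window="${window}" -v factor="${factor}" '
    $1 >= now - window { sum += $NF; count++ }
    END {
      if (count == 0) { print 0; exit }   # no recent request: no threshold
      printf("%.0f\n", factor * sum / count)
    }'
}
```

For example, “hogging_threshold "$(date +%s)" < access.log” (file name hypothetical) would print the current threshold in seconds. As explained above, this only gives the threshold: finding on-going requests above it still requires extending Tomcat.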

Example of detection for Hogging Threads

In this section, I will use the simple approach I mentioned above, which uses a fixed amount of time to decide whether a thread is Hogging or not. Depending on your application, the value to use might be 50, 90, 120 or whatever makes sense to you. Here I will use 120s as a baseline. Therefore, my Tomcat “server.xml” content is as follows:

[tomcat@d2-0 ~]$ grep -A1 Stuck $TOMCAT_HOME/conf/server.xml

<Valve className="org.apache.catalina.valves.StuckThreadDetectionValve"

threshold="120" />

[tomcat@d2-0 ~]$

Using the AWK parser I shared in that blog, I get this kind of outcome:

[tomcat@d2-0 ~]$ cat $TOMCAT_HOME/logs/catalina.out | awk -f parse_stuck.awk

============================================

========== COMPLETED LONG THREADS ==========

============================================

THREAD STIME DTIME DURATION CTIME NUM REQUEST

https-jsse-nio-8080-exec-41 [6/15/24 5:44 AM] 05:46:22,268 [131,487] 05:46:32,271 0 [https://dctm-env.domain.com/D2/x3_portal/loginService]

https-jsse-nio-8080-exec-26 [6/15/24 7:48 AM] 07:50:33,630 [128,939] 07:50:43,633 0 [https://dctm-env.domain.com/D2/x3_portal/creationService]

https-jsse-nio-8080-exec-27 [6/15/24 10:17 AM] 10:19:26,921 [131,451] 10:19:36,925 0 [https://dctm-env.domain.com/D2/x3_portal/doclistService]

https-jsse-nio-8080-exec-1 [6/15/24 10:19 AM] 10:21:36,952 [129,760] 10:21:46,954 0 [https://dctm-env.domain.com/D2/x3_portal/searchManagerService]

https-jsse-nio-8080-exec-26 [6/15/24 11:49 AM] 11:51:43,233 [124,429] 11:51:46,368 0 [https://dctm-env.domain.com/D2/servlet/ExportContent?uid=...]

============================================

======= CURRENT LONG RUNNING THREADS =======

============================================

THREAD STIME DTIME DURATION REQUEST

https-jsse-nio-8080-exec-34 [6/15/24 12:18 AM] 12:20:24,530 [122,150] [https://dctm-env.domain.com/D2/x3_portal/loginService]

https-jsse-nio-8080-exec-66 [6/15/24 12:25 AM] 12:27:10,305 [120,300] [https://dctm-env.domain.com/D2/x3_portal/searchManagerService]

https-jsse-nio-8080-exec-2 [6/15/24 12:30 AM] 12:32:05,982 [121,495] [https://dctm-env.domain.com/D2/x3_portal/creationService]

[tomcat@d2-0 ~]$

In that output, there are 5 threads that took around 130s but have completed. However, there are still 3 threads that haven’t completed yet. In terms of Stuck and Hogging threads, what matters is what is still running, so we can ignore the completed ones. What is important for monitoring is, at least, to receive the number of Stuck/Hogging threads. You might want to include the request URL as well, so you can detect specific issues in case a lot of requests are Stuck/Hogging on the same service. In this blog, I will only care about the number.

For that purpose, let’s simplify the AWK parser a bit, creating a new one with this content (as a reminder, this parser depends on the log format):

[tomcat@d2-0 ~]$ cat thread.awk

/./ {

sub("UTC","");

}

/org.apache.catalina.valves.StuckThreadDetectionValve.notifyStuckThreadDetected/ {

thread=substr($7,2,length($7)-2);

dtime[thread]=$1"_"$2;

tmp=substr($0,index($0,"active for ")+11);

duration[thread]=substr(tmp,1,index(tmp," ")-1);

not_ended[thread]=0;

}

/org.apache.catalina.valves.StuckThreadDetectionValve.notifyStuckThreadCompleted/ {

thread=substr($7,2,length($7)-2);

# The thread completed: forget it so the END block doesn't report it

delete dtime[thread];

delete duration[thread];

delete not_ended[thread];

}

END {

for ( i in not_ended ) {

if (not_ended[i]==0) {

printf("%s\t%10s\n",dtime[i],duration[i]);

}

}

}

[tomcat@d2-0 ~]$

To validate it, I executed it on the exact same log file, and I expected only 3 rows, containing only the detection time and the duration of each thread at detection. I got exactly what I expected:

[tomcat@d2-0 ~]$ cat $TOMCAT_HOME/logs/catalina.out | awk -f thread.awk

2024-06-15_12:20:24,530 [122,150]

2024-06-15_12:27:10,305 [120,300]

2024-06-15_12:32:05,982 [121,495]

[tomcat@d2-0 ~]$

My goal from here was to find the exact start time of each request and compare it with the current date/time. If the resulting difference is lower than 600, then I would consider the thread as Hogging, otherwise as Stuck. This works because whatever appears in the log has been running for at least 120s, as defined in the threshold of the Stuck Thread Detector Valve. Doing that in bash is pretty easy:

[tomcat@d2-0 ~]$ ls -l thread.*

-rw-------. 1 tomcat tomcat 731 Jun 15 11:28 thread.awk

-rwxr-x---. 1 tomcat tomcat 1200 Jun 15 11:50 thread.sh

[tomcat@d2-0 ~]$

[tomcat@d2-0 ~]$ cat thread.sh

#!/bin/bash

# Variables

script_folder=`which ${0}`

script_folder=`dirname ${script_folder}`

parse_awk="${script_folder}/thread.awk"

input_log="$TOMCAT_HOME/logs/catalina.out"

nb_stuck_threads=0

nb_hogging_threads=0

current_time=`date +%s`

# Parse log file to retrieve long running threads

while read -r line; do

thread_detection=`echo ${line} | awk -F, '{print $1}' | sed 's,_, ,'`

thread_duration=`echo ${line} | awk -F\[ '{print $2}' | awk -F, '{print $1}'`

thread_detection_epoch=`date -d "${thread_detection} UTC" +%s`

thread_start="$(( ${thread_detection_epoch} - ${thread_duration} ))"

thread_age="$(( ${current_time} - ${thread_start} ))"

if [[ ${thread_age} -ge 600 ]]; then

nb_stuck_threads=$(( nb_stuck_threads + 1 ))

else

nb_hogging_threads=$(( nb_hogging_threads + 1 ))

fi

done < <(cat ${input_log} | awk -f ${parse_awk})

echo "$(date --utc) -- ${nb_stuck_threads} Stuck // ${nb_hogging_threads} Hogging"

[tomcat@d2-0 ~]$

And its execution:

[tomcat@d2-0 ~]$ ./thread.sh

Sat Jun 15 12:34:40 UTC 2024 -- 1 Stuck // 2 Hogging

[tomcat@d2-0 ~]$

[tomcat@d2-0 ~]$ ./thread.sh

Sat Jun 15 12:35:21 UTC 2024 -- 2 Stuck // 1 Hogging

[tomcat@d2-0 ~]$

Is that the expected outcome? Yes: there are 3 running threads that were detected between 12:20 and 12:32. At that time, these threads had already been running for 120 to 122 seconds, meaning the executions started at 12:18:22, 12:25:10 and 12:30:04 respectively. Therefore, with the first execution of the shell script at 12:34:40, only the 1st of the 3 threads is over 10 minutes old (16min 18s exactly); the 2 others are still below (9min 30s & 4min 36s respectively). Then, with the second execution at 12:35:21, the middle thread is now 10min 11s old: it’s not a Hogging Thread anymore, but a Stuck Thread.
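The same arithmetic can be checked with a small function working on epoch seconds, like thread.sh does. “thread_state” is a hypothetical name; it takes the detection time, the duration reported at detection (in seconds) and the current time, and prints the state and the age of the request, using the same “-ge 600” rule as the script.

```bash
#!/bin/bash
# Hypothetical helper: derive the request start time from the detection time
# and the reported duration, then classify it by age (Stuck at >= 600s).
thread_state() {
  local detected="$1" duration="$2" now="$3" stuck_after="${4:-600}"
  local age=$(( now - (detected - duration) ))
  local state="hogging"
  (( age >= stuck_after )) && state="stuck"
  printf '%s %dm%02ds\n' "${state}" $(( age / 60 )) $(( age % 60 ))
}
```

Using seconds since midnight for readability (only differences matter), “thread_state 44830 120 45280” (detection at 12:27:10, check at 12:34:40) prints “hogging 9m30s”, and “thread_state 44830 120 45321” (check at 12:35:21) prints “stuck 10m11s”, matching the explanation above.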

That’s just a quick example and, as mentioned, it could be improved by giving not only the number of threads but also a mapping of threads vs URLs to detect trends. The output would also depend on the monitoring system that fetches this data.
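As a starting point for such a mapping of threads vs URLs, the full parse_stuck.awk report can be grouped by request URL. This is a sketch assuming the exact report layout shown earlier (a CURRENT LONG RUNNING THREADS banner, then one line per thread ending with the bracketed URL); “count_running_by_url” is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical helper: read the parse_stuck.awk report on stdin and count the
# currently running long threads per request URL, most affected URL first.
# Lines from the COMPLETED section (before the banner) are ignored.
count_running_by_url() {
  awk '
    /CURRENT LONG RUNNING THREADS/ { in_current = 1; next }
    in_current && $NF ~ /^\[https?:\/\// { count[$NF]++ }
    END { for (url in count) printf("%d %s\n", count[url], url) }' \
    | sort -k1,1nr -k2,2
}
```

For instance, “awk -f parse_stuck.awk $TOMCAT_HOME/logs/catalina.out | count_running_by_url” would print one “count [URL]” line per service.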

The article Hogging Threads check in Apache Tomcat first appeared on dbi Blog.

Is DPR compatible with 12.1 databases running on your ODA?

Since patch version 19.21 (the current one is 19.23), ODA (Oracle Database Appliance) X7, X8 and X9 require the use of Data Preserving Reprovisioning (DPR) to get the patch. Unlike traditional patching, DPR erases the system disks but keeps the data on ASM/ACFS disks. Data includes databases, ACFS volumes, DB homes, vDisks for DB Systems and VMs. But it doesn’t keep your system settings: specific OS configurations, additional tools, monitoring, users and groups, and so on. The reason the classic patching method can no longer be used is the embedded OS upgrade from Linux 7 to Linux 8: it’s much easier to set up a brand new system than to apply such a major patch onto an existing one. But what about your old (and unsupported) Oracle database releases? Let’s find out for 12cR1 databases.

ODA’s hardware and software support

According to MOS note Doc ID 2757884.1, only the ODA X7, X8, X9 and X10 series are currently supported. The support is valid if you run at least patch version 19.19. The only database versions supported are 19c on bare metal, and 21c and 19c as DB Systems.

There is no longer any support for older releases, like 12cR1 for example. But it doesn’t mean that 12cR1 will stop working as soon as you deploy patch 19.19 or higher. With classic patching, your old databases will still be OK. Patching with DPR is another story: as old releases are no longer supported, you may struggle to plug your old databases back into the new Linux system. One could advise upgrading all your databases to 19c before using DPR, but that’s not so easy for a lot of my clients. Many old databases are legacy and must keep running for another couple of years, and 12cR1 is the main release that must survive patching.

Environment and context

I worked on the following example 2 weeks ago:

- an ODA X8-2M running patch 19.18

- patching is done once a year, and this time I need to update to version 19.22 and I must use DPR

- most of the databases are running 19.18

- 4 databases are still running 12cR1 from the initial deployment (ODA version 19.15)

- these 12cR1 databases were not patched during last year’s update from 19.15 to 19.18, as no 12cR1 patch was provided with 19.18

There is no reason 12cR1 would stop working after this OS upgrade: 12cR1 is supported (so to speak) on Linux 8, as mentioned in the certification matrix on MOS.

DPR preupgrade

DPR means reimaging the ODA, but before reimaging, you will need to register the 19.22 patch and update the DCS components. The relevant preupgrade and detach-node operations are shipped within the patch.

odacli update-repository -f /backup/patch/19_22/oda-sm-19.22.0.0.0-240306-server.zip

sleep 60 ; odacli describe-job -i "16596d34-9b93-4c7d-b502-4ad27804fa69"

...

odacli update-dcsadmin -v 19.22.0.0.0

sleep 120 ; odacli describe-job -i "aed1c8ef-713b-43c2-9355-cb364387dcd0"

...

odacli update-dcscomponents -v 19.22.0.0.0

...

odacli update-dcsagent -v 19.22.0.0.0

sleep 180 ; odacli describe-job -i 1a10900c-2fc7-4535-8f9b-879a43243e66

...

Once your ODA knows what’s needed for DPR to 19.22, let’s run the preupgrade operation:

odacli create-preupgradereport -bm

Preupgrade will check a lot of prerequisites, but let’s focus on 12cR1:

odacli describe-preupgradereport -i b1f6dddb-c531-45d5-842f-bf3cf10231fe

Upgrade pre-check report

------------------------------------------------------------------------

Job ID: b1f6dddb-c531-45d5-842f-bf3cf10231fe

Description: Run pre-upgrade checks for Bare Metal

Status: FAILED

Created: April 15, 2024 1:47:17 PM CEST

Result: One or more pre-checks failed for [DB]

...

Validate Database Version Failed Version '12.1.0.2.220419' for Please update the database to the

database 'BRCLATST' is lower than minimum supported version or higher

minimum supported version

'12.1.0.2.220719'

...

Validate Database Version Failed Version '12.1.0.2.220419' for Please update the database to the

database 'BRSLTST' is lower than minimum supported version or higher

minimum supported version

'12.1.0.2.220719'

...

Validate Database Version Failed Version '12.1.0.2.220419' for Please update the database to the

database 'BRRTST' is lower than minimum supported version or higher

minimum supported version

'12.1.0.2.220719'

...

Validate Database Version Failed Version '12.1.0.2.220419' for Please update the database to the

database 'BRFTST' is lower than minimum supported version or higher

minimum supported version

'12.1.0.2.220719'

...

Fortunately, my 4 databases are not flagged as unsupported or incompatible with 19.22. However, they are using patch 12.1.0.2.220419, as deployed with ODA version 19.15, and I will need to update them to 12.1.0.2.220719. This is the version embedded in the latest ODA patch that supports 12cR1: 19.16. It should be quite easy to apply.
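The failed check boils down to a version comparison: '12.1.0.2.220419' is lower than '12.1.0.2.220719'. A minimal sketch to reproduce that comparison yourself, for example against the versions shown by odacli list-dbhomes, assuming GNU coreutils `sort -V` (version sort) is available. `version_lt` and `MIN_12C_VERSION` are hypothetical names, not part of odacli.

```bash
#!/usr/bin/env bash
# Minimum 12cR1 version reported by the 19.22 pre-upgrade check above.
MIN_12C_VERSION="12.1.0.2.220719"

# Return 0 if version $1 is strictly lower than version $2 (GNU sort -V).
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

for v in 12.1.0.2.220419 12.1.0.2.220719; do
  if version_lt "$v" "$MIN_12C_VERSION"; then
    echo "$v: below minimum, update the DB home first"
  else
    echo "$v: OK"
  fi
done
```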

Registering the DB home and applying the patch?

I thought I could simply download the DB clone, register it in the repository and apply the patch, but applying the patch to a DB home also requires the system patch: the target version for updating a DB home is an ODA patch number, and metadata inside the system patch links it to the associated DB home. Registering this old patch is not a problem, even if you are currently running a higher version:

odacli update-repository -f /backup/patch/19_22/odacli-dcs-19.16.0.0.0-220805-DB-12.1.0.2.zip

sleep 60 ; odacli describe-job -i "304762d3-948b-419b-9954-0d402da8304d"

...

odacli update-repository -f /backup/patch/19_22/oda-sm-19.16.0.0.0-220809-server.zip

sleep 60 ; odacli describe-job -i "4e75492b-60d4-4351-9966-80fdec97b312"

...

odacli list-dbhomes

ID Name DB Version DB Edition Home Location Status

---------------------------------------- -------------------- -------------------- ---------- -------------------------------------------------------- ----------

1cbe3f74-2746-4ab0-81f9-2d1b9f5c3d56 OraDB12102_home1 12.1.0.2.220419 EE /u01/app/odaorahome/oracle/product/12.1.0.2/dbhome_1 CONFIGURED

94aba6b1-850b-45d5-b123-c9eade003fa8 OraDB19000_home2 19.18.0.0.230117 EE /u01/app/odaorahome/oracle/product/19.0.0.0/dbhome_2 CONFIGURED

odacli create-prepatchreport -d -i 1cbe3f74-2746-4ab0-81f9-2d1b9f5c3d56 -v 19.16.0.0

sleep 180 ; odacli describe-prepatchreport -i 55a5e235-096d-4d26-b922-4194c361b16f

...

odacli update-dbhome -i 1cbe3f74-2746-4ab0-81f9-2d1b9f5c3d56 -v 19.16.0.0.0 -f

...

Once the update is finished, the old DB home can be safely removed:

odacli list-dbhomes

ID Name DB Version DB Edition Home Location Status

---------------------------------------- -------------------- -------------------- ---------- -------------------------------------------------------- ----------

1cbe3f74-2746-4ab0-81f9-2d1b9f5c3d56 OraDB12102_home1 12.1.0.2.220419 EE /u01/app/odaorahome/oracle/product/12.1.0.2/dbhome_1 CONFIGURED

94aba6b1-850b-45d5-b123-c9eade003fa8 OraDB19000_home2 19.18.0.0.230117 EE /u01/app/odaorahome/oracle/product/19.0.0.0/dbhome_2 CONFIGURED

f715ab11-12a7-4fef-940a-19b71500a93d OraDB12102_home3 12.1.0.2.220719 EE /u01/app/odaorahome/oracle/product/12.1.0.2/dbhome_3 CONFIGURED

odacli delete-dbhome -i 1cbe3f74-2746-4ab0-81f9-2d1b9f5c3d56

odacli describe-job -i 1b1162a5-38d8-439b-af93-aa7246e69ff9

...

Let’s retry the preupgrade:

odacli create-preupgradereport -bm

Status is now OK:

odacli describe-preupgradereport -i a2cc67c4-fb43-4556-9e3c-a02b9e53736d

Upgrade pre-check report

------------------------------------------------------------------------

Job ID: a2cc67c4-fb43-4556-9e3c-a02b9e53736d

Description: Run pre-upgrade checks for Bare Metal

Status: SUCCESS

Created: May 27, 2024 2:02:41 PM CEST

Result: All pre-checks succeeded

...

Now let’s do the detach to prepare our ODA for reimaging:

odacli detach-node -all

********************************************************************************

IMPORTANT

********************************************************************************

'odacli detach-node' will bring down the databases and grid services on the

system. The files that belong to the databases, which are stored on ASM or ACFS,

are left intact on the storage. The databases will be started up back after

re-imaging the ODA system using 'odacli restore-node' commands. As a good

precautionary measure, please backup all the databases on the system before you

start this process. Do not store the backup on this ODA machine since the local

file system will be wiped out as part of the re-image.

********************************************************************************

Do you want to continue (yes/no)[no] : yes

odacli describe-job -i "37cbd5df-708f-4631-872d-f574dd1279e0"

Job details

----------------------------------------------------------------

ID: 37cbd5df-708f-4631-872d-f574dd1279e0

Description: Detach node service creation for upgrade

Status: Success

Created: May 27, 2024 2:35:38 PM CEST

Message: On successful job completion, the server archive file will be generated at /opt/oracle/oak/restore/out. Please copy the server archive file outside of the ODA system before re-image

...

Detach is OK. You must now copy the content of /opt/oracle/oak/restore/out outside the ODA, for example to an NFS share and/or your local computer. YOU MUST DO THIS BACKUP BEFORE REIMAGING, otherwise you will not be able to use your data anymore. These files are mandatory for reattaching your data to the new system version.

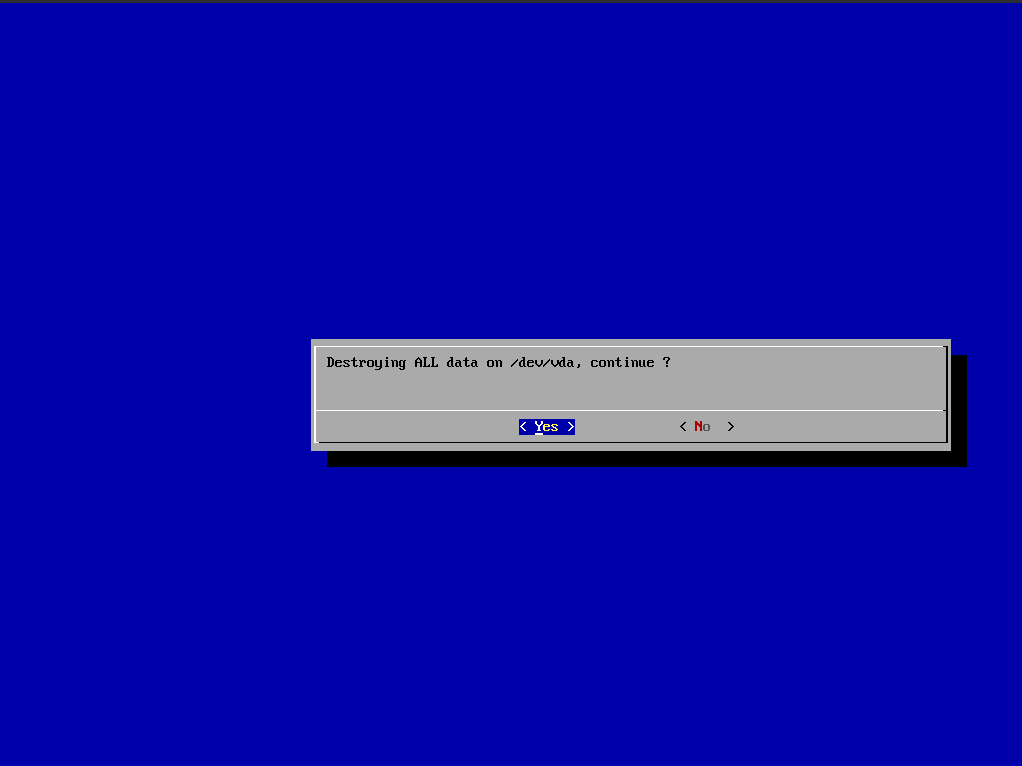

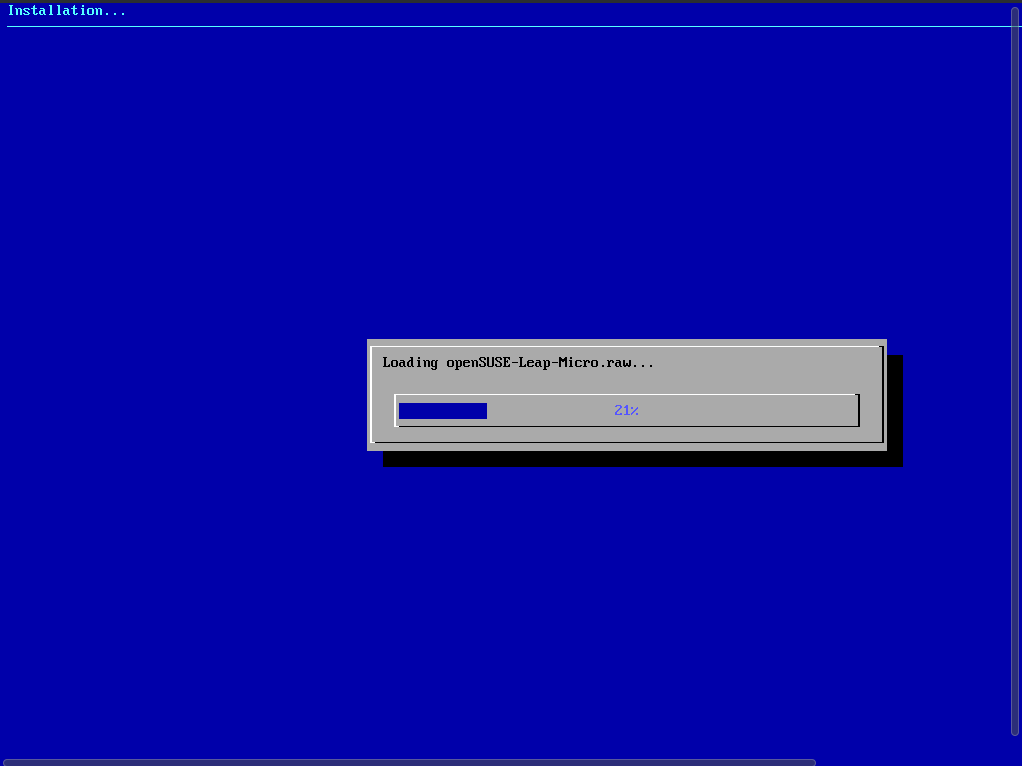

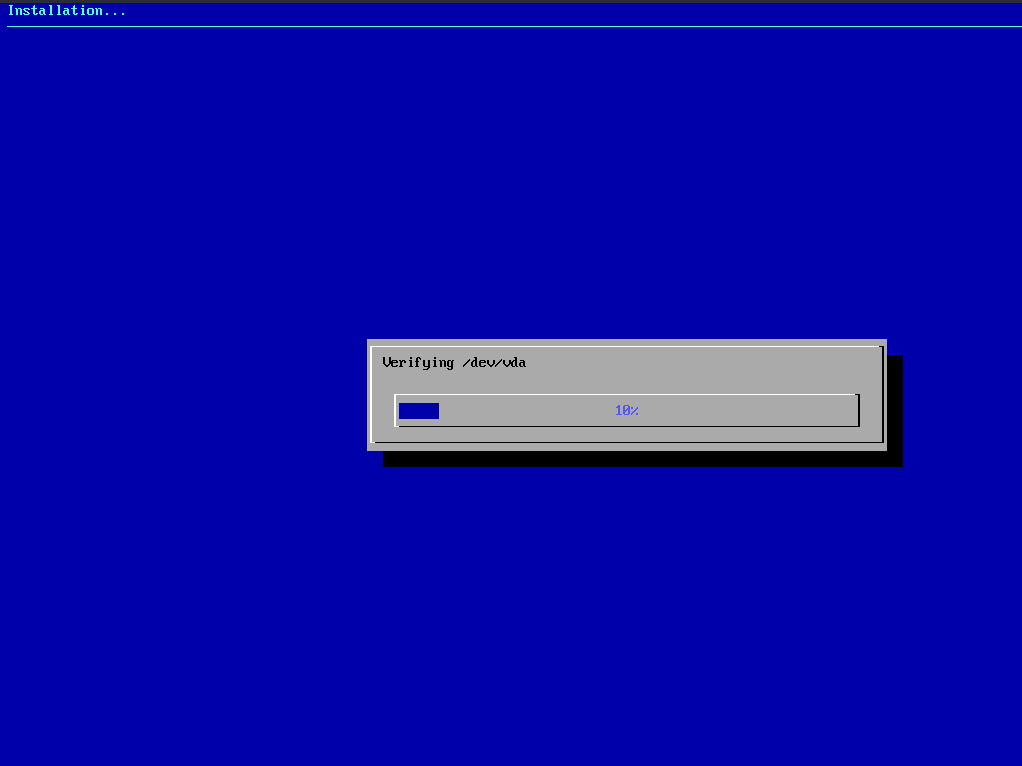
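Because this copy is critical, it is worth verifying it before reimaging. A minimal sketch, assuming the output directory only contains regular files (such as the server archive zip) and that `sha256sum` from coreutils is available; `copy_and_verify` and the destination path in the usage line are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy each regular file from $1 to $2, then compare
# SHA-256 checksums of the source and the copy. Returns 1 on any failure.
copy_and_verify() {
  local src=$1 dst=$2 f name
  mkdir -p "$dst" || return 1
  for f in "$src"/*; do
    [ -f "$f" ] || continue            # only regular files are expected here
    name=$(basename "$f")
    cp -p "$f" "$dst/$name" || return 1
    if [ "$(sha256sum < "$f")" != "$(sha256sum < "$dst/$name")" ]; then
      echo "Checksum mismatch for $name" >&2
      return 1
    fi
  done
  echo "Copied and verified: $src -> $dst"
}

# Usage (hypothetical destination on an NFS share):
# copy_and_verify /opt/oracle/oak/restore/out /backup/oda_detach/oratest04
```

Keeping a second copy on another machine (for example your local computer, as suggested above) adds protection if the share itself is unavailable after reimaging.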

Reimaging

Reimaging is done through the ILOM as described in the ODA documentation. Just connect the 19.22 ISO under the storage menu of the remote console of the server, define CDROM as the next boot device, and do a power cycle.

Reimaging is an automated process and it takes less than 1 hour to complete.

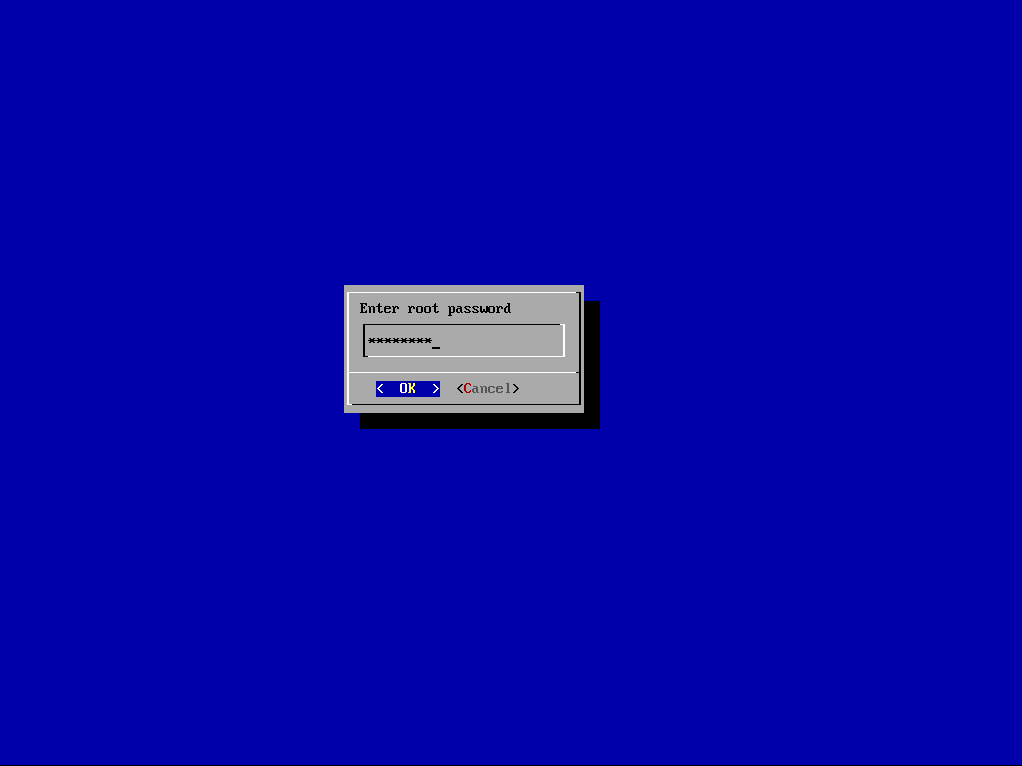

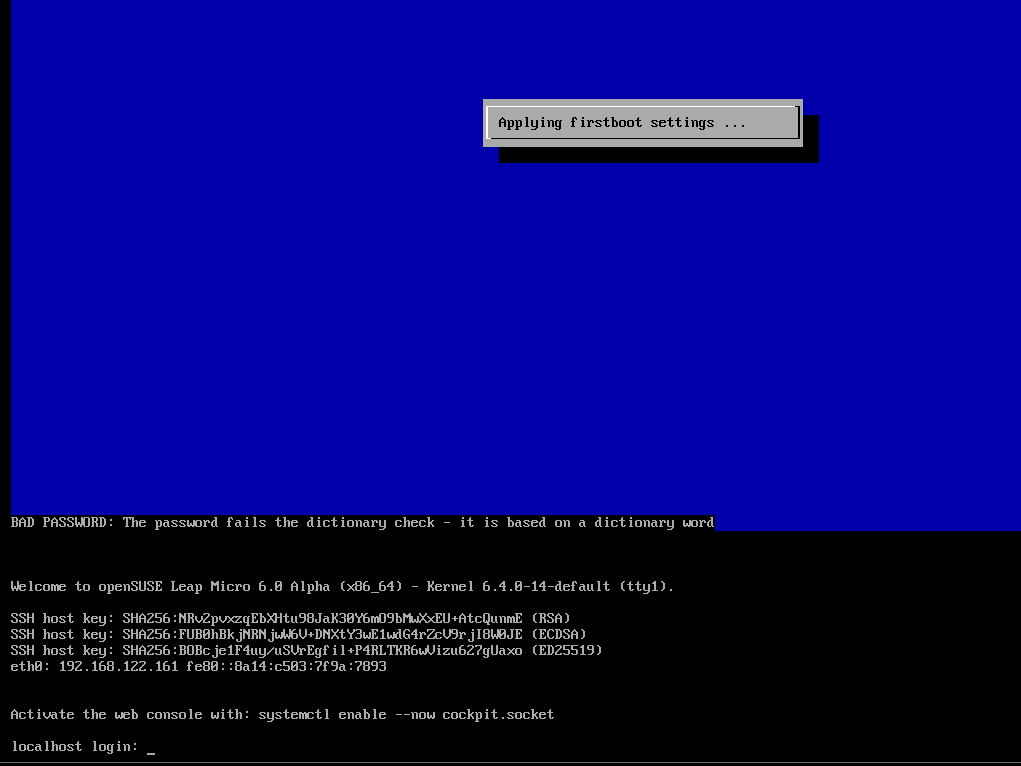

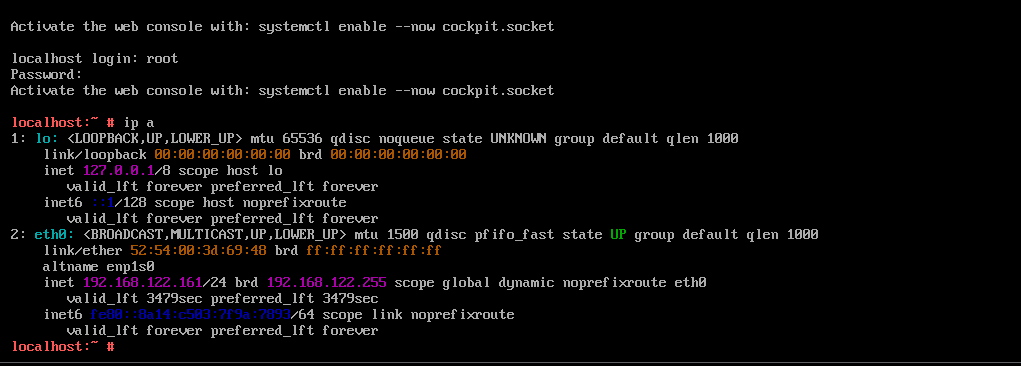

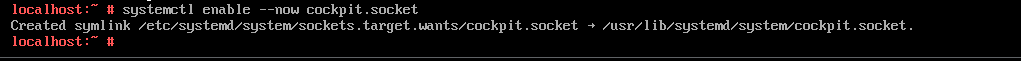

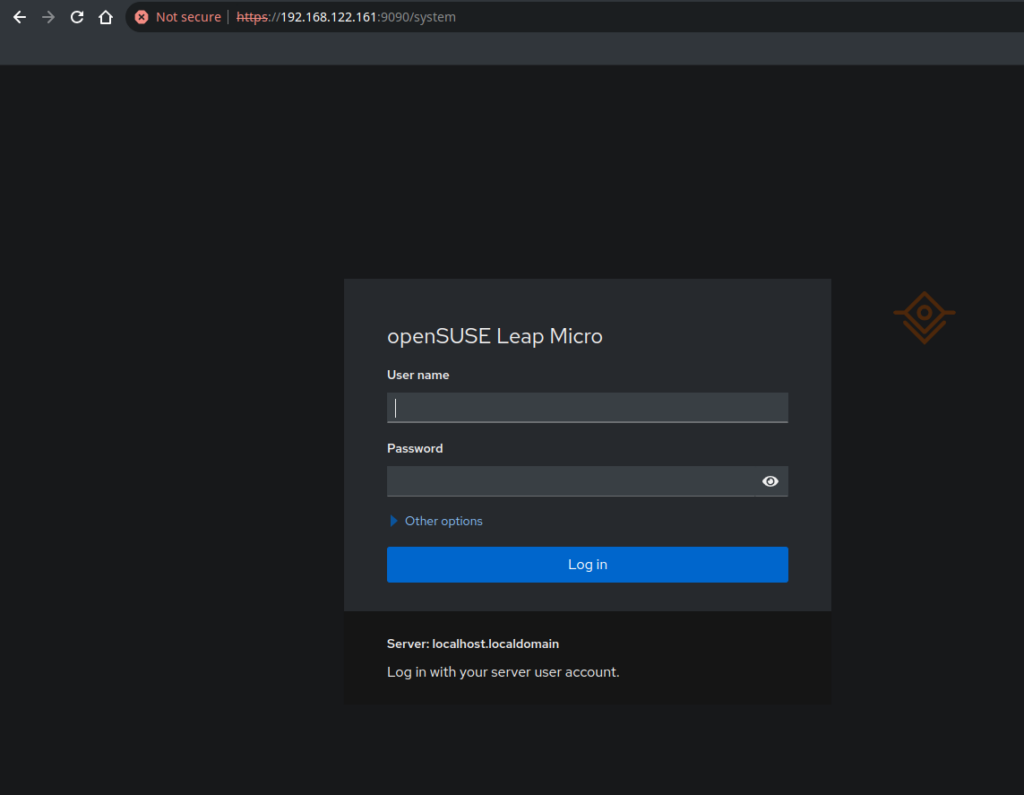

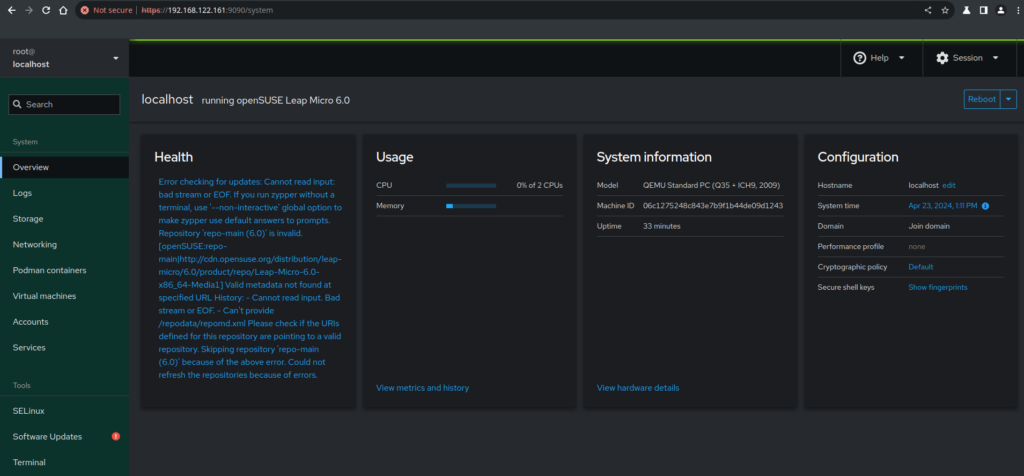

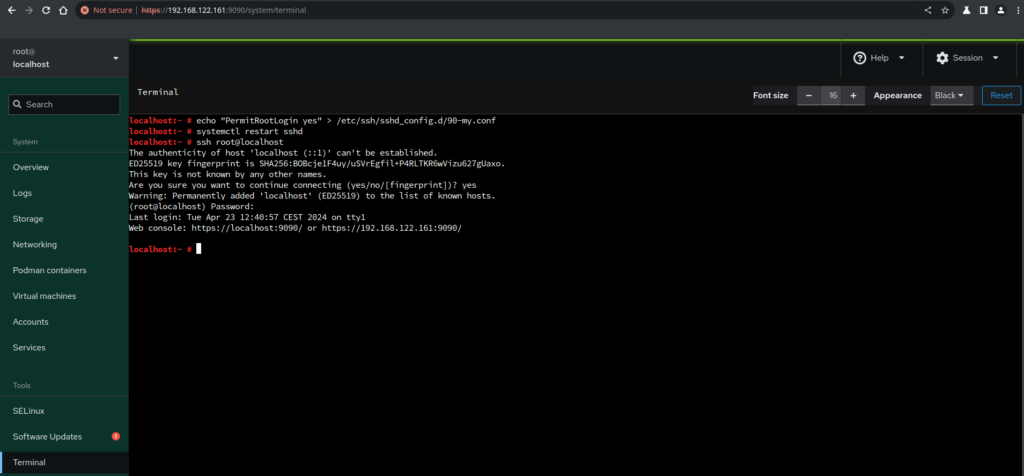

Firstnet configuration

Our system is now “brand new”, and you will need to connect with the default credentials (root/welcome1) on the remote console to do the firstnet configuration:

configure-firstnet

You can find parameters for this initial network configuration inside the detach zipfile:

cat serverarchive_oratest04/restore/configure-firstnet.rsp

# ------------------------------------------------------------

# This file was generated by the ODA detach-node code flow.

# Don't modify this file

#

# Created On: 2024-05-27 14:35:38

# Version: 19.22.0.0.0

# Feature: Data Preserving

# Re-provisioning

#

# To be used for configure-firstnet post

# reimage

# ------------------------------------------------------------

HOSTNAME=oratest04

INTERFACE_NAME=btbond1

VLAN=NO

IP_ADDR=10.10.32.126

SUBNET_MASK=255.255.255.0

GATEWAY=10.10.32.1

Now the server is back online in your network, and you can mount your NFS shares, for example the one where the patch files reside:

echo "10.10.32.100:/data/vol_data_490/ODA_backups /backup nfs defaults 0 0" >> /etc/fstab

systemctl daemon-reload

mkdir /backup

mount -a

Reimaging never updates the firmware (BIOS, ILOM, etc.), so you’ll have to update it right now:

odacli describe-component

System Version

---------------

19.22.0.0.0

System node Name

---------------

oak

Local System Version

---------------

19.22.0.0.0

Component Installed Version Available Version

---------------------------------------- -------------------- --------------------

OAK

19.22.0.0.0 up-to-date

DCSCONTROLLER

19.22.0.0.0 up-to-date

DCSCLI

19.22.0.0.0 up-to-date

DCSAGENT

19.22.0.0.0 up-to-date

DCSADMIN

19.22.0.0.0 up-to-date

OS

8.9 up-to-date

ILOM

5.1.0.23.r146986 5.1.3.20.r153596

BIOS

52080100 52110200

LOCAL CONTROLLER FIRMWARE

8000C470 8000D9AB

SHARED CONTROLLER FIRMWARE

VDV1RL05 VDV1RL06

LOCAL DISK FIRMWARE

D3MU001 up-to-date

HMP

2.4.9.2.600 up-to-date

odacli update-repository -f /backup/patch/19_22/Server/oda-sm-19.22.0.0.0-240306-server.zip

sleep 60 ; odacli describe-job -i "b341b9cd-0294-4112-a080-24466a3a13e7"

...

odacli create-prepatchreport -s -v 19.22.0.0.0

sleep 180 ; odacli describe-prepatchreport -i afd38dae-69f7-46a2-abe6-cff15b31ad37

Patch pre-check report

------------------------------------------------------------------------

Job ID: afd38dae-69f7-46a2-abe6-cff15b31ad37

Description: Patch pre-checks for [OS, ILOM, SERVER]

Status: SUCCESS

Created: May 27, 2024 2:35:22 PM UTC

Result: All pre-checks succeeded

...

odacli update-server -v 19.22.0.0.0

odacli describe-job -i "3bf82e72-eda6-4e03-b363-ee872b7ca8e8"

Job details

----------------------------------------------------------------

Job ID: 3bf82e72-eda6-4e03-b363-ee872b7ca8e8

Description: Server Patching

Status: Success

Created: May 27, 2024 2:37:02 PM UTC

Message:

...

The server reboots at the end of this update. Note that you may need to wait several minutes after the reboot to see the BIOS and ILOM versions updated.

odacli describe-component

System Version

---------------

19.22.0.0.0

System node Name

---------------

oak

Local System Version

---------------

19.22.0.0.0

Component Installed Version Available Version

---------------------------------------- -------------------- --------------------

OAK

19.22.0.0.0 up-to-date

DCSCONTROLLER

19.22.0.0.0 up-to-date

DCSCLI

19.22.0.0.0 up-to-date

DCSAGENT

19.22.0.0.0 up-to-date

DCSADMIN

19.22.0.0.0 up-to-date

OS

8.9 up-to-date

ILOM

5.1.3.20.r153596 up-to-date

BIOS

52110200 up-to-date

LOCAL CONTROLLER FIRMWARE

8000D9AB up-to-date

SHARED CONTROLLER FIRMWARE

VDV1RL05 VDV1RL06

LOCAL DISK FIRMWARE

D3MU001 up-to-date

HMP

2.4.9.2.600 up-to-date

Now let’s patch the storage:

odacli update-storage -v 19.22.0.0.0

odacli describe-job -i f829abeb-5755-491c-9463-825b0eca5409

...

The server reboots again; checking the versions afterwards shows that everything is “up-to-date”:

odacli describe-component

System Version

---------------

19.22.0.0.0

System node Name

---------------

oak

Local System Version

---------------

19.22.0.0.0

Component Installed Version Available Version

---------------------------------------- -------------------- --------------------

OAK

19.22.0.0.0 up-to-date

DCSCONTROLLER

19.22.0.0.0 up-to-date

DCSCLI

19.22.0.0.0 up-to-date

DCSAGENT

19.22.0.0.0 up-to-date

DCSADMIN

19.22.0.0.0 up-to-date

OS

8.9 up-to-date

ILOM

5.1.3.20.r153596 up-to-date

BIOS

52110200 up-to-date

LOCAL CONTROLLER FIRMWARE

8000D9AB up-to-date

SHARED CONTROLLER FIRMWARE

VDV1RL06 up-to-date

LOCAL DISK FIRMWARE

D3MU001 up-to-date

HMP

2.4.9.2.600 up-to-date
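Instead of scanning the whole describe-component table after each step, its output can be filtered. A sketch, assuming the layout shown in this post (each component name on its own line, followed by a line with the installed and available versions); output from other ODA versions or configurations may differ. `pending_updates` is a hypothetical helper, not an odacli feature.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "odacli describe-component" output on stdin and
# print every component whose available version is not "up-to-date".
# Assumes the two-line layout shown above: component name, then versions.
pending_updates() {
  awk '
    # The component table starts after its header line.
    $1 == "Component" && $2 == "Installed" { in_table = 1; next }
    # Skip everything before the table, blank lines and the dashed separator.
    !in_table || NF == 0 || /^-/ { next }
    # Second line of a pair: "<installed> <available>".
    expect_versions {
      if ($2 != "up-to-date") printf "%s: %s -> %s\n", name, $1, $2
      expect_versions = 0
      next
    }
    # First line of a pair: the component name (may contain spaces).
    {
      name = $0
      gsub(/^[[:space:]]+|[[:space:]]+$/, "", name)
      expect_versions = 1
    }
  '
}

# Usage: odacli describe-component | pending_updates
# No output means every listed component is up-to-date.
```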

Let’s register the GI clone version 19.22 as well as the detach files, then restore the ODA configuration:

odacli update-repository -f /backup/patch/19_22/Grid/odacli-dcs-19.22.0.0.0-240306-GI-19.22.0.0.zip

odacli describe-job -i "d8b33efb-9f66-461c-89ea-f0db33e22cba"

...

odacli update-repository -f /backup/patch/19_22/oratest04/root/oda_nodes/serverarchive_oratest04.zip

odacli describe-job -i "e0c5b0cf-86bb-4961-9861-1ef598b9d6d9"

...

odacli restore-node -g

Enter New system password: *************

Retype New system password: *************

Enter an initial password for Web Console account (oda-admin): *************

Retype the password for Web Console account (oda-admin): *************

User 'oda-admin' created successfully...

odacli describe-job -i 3f2aed36-b654-4df5-ab63-23a740adb5cc

...

Restore node takes 25+ minutes.
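For long jobs like this one, the fixed `sleep` before `odacli describe-job` used throughout this post is only a guess. A sketch of a polling helper, assuming describe-job prints a "Status: <value>" line as in the outputs above and that Success and Failure are final states (compared case-insensitively). `job_status`, `wait_for_job`, `POLL_INTERVAL` and `MAX_POLLS` are hypothetical names; `${var,,}` requires bash 4 or later.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: poll an odacli job until it reaches a final status.
POLL_INTERVAL=${POLL_INTERVAL:-30}   # seconds between two checks
MAX_POLLS=${MAX_POLLS:-240}          # give up after this many checks

# Print the Status value of a job (first "Status:" line of describe-job).
job_status() {
  odacli describe-job -i "$1" | awk '$1 == "Status:" { print $2; exit }'
}

# Return 0 on success, 1 on failure, 2 if the job is still not finished.
wait_for_job() {
  local job_id=$1 status i
  for (( i = 0; i < MAX_POLLS; i++ )); do
    status=$(job_status "$job_id")
    case "${status,,}" in
      success)        echo "Job ${job_id}: ${status}"; return 0 ;;
      failure|failed) echo "Job ${job_id}: ${status}" >&2; return 1 ;;
    esac
    sleep "$POLL_INTERVAL"
  done
  echo "Job ${job_id}: still '${status}' after ${MAX_POLLS} checks" >&2
  return 2
}

# Usage: odacli restore-node -d, then wait_for_job <job id printed by odacli>
```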

Restore node – databases

Now it’s time to restore our databases:

odacli restore-node -d

odacli describe-job -i "0081ba7a-f987-40ef-95af-62654c18e354"

Job details

----------------------------------------------------------------

Job ID: 0081ba7a-f987-40ef-95af-62654c18e354

Description: Restore node service - DB

Status: Success

Created: May 27, 2024 5:51:25 PM CEST

Message:

Task Name Start Time End Time Status

---------------------------------------- ---------------------------------------- ---------------------------------------- -------

Setting up SSH equivalence for 'oracle' May 27, 2024 5:51:29 PM CEST May 27, 2024 5:51:30 PM CEST Success

Restore BM CPU Pools May 27, 2024 5:51:30 PM CEST May 27, 2024 5:51:30 PM CEST Success

Register DB home: OraDB19000_home2 May 27, 2024 5:51:30 PM CEST May 27, 2024 5:51:33 PM CEST Success

Register DB home: OraDB12102_home3 May 27, 2024 5:51:33 PM CEST May 27, 2024 5:51:57 PM CEST Success

Persist database storage locations May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for MCHDEV May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for MCHREF May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for LV01DEV May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for LV02DEV May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for MCHTST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for LV02TST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for MCHBAC May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for BRRTST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for LV01TST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for DBTEST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for KGED19TS May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for BRFTST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for BRSLTST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Save metadata for BRCLATST May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Success

Persist database storages May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:57 PM CEST Skipped

Restore database: MCHDEV May 27, 2024 5:51:57 PM CEST May 27, 2024 5:53:03 PM CEST Success

+-- Adding database to GI May 27, 2024 5:51:57 PM CEST May 27, 2024 5:51:59 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:51:59 PM CEST May 27, 2024 5:51:59 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:51:59 PM CEST May 27, 2024 5:52:33 PM CEST Success

+-- Restore password file for database May 27, 2024 5:52:33 PM CEST May 27, 2024 5:52:33 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 5:52:33 PM CEST May 27, 2024 5:52:49 PM CEST Success

+-- Persist metadata for database May 27, 2024 5:52:49 PM CEST May 27, 2024 5:52:49 PM CEST Success

+-- Create adrci directory May 27, 2024 5:52:49 PM CEST May 27, 2024 5:52:49 PM CEST Success

+-- Run SqlPatch May 27, 2024 5:52:49 PM CEST May 27, 2024 5:53:03 PM CEST Success

Restore database: MCHREF May 27, 2024 5:53:03 PM CEST May 27, 2024 5:54:03 PM CEST Success

+-- Adding database to GI May 27, 2024 5:53:03 PM CEST May 27, 2024 5:53:04 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:53:04 PM CEST May 27, 2024 5:53:04 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:53:04 PM CEST May 27, 2024 5:53:38 PM CEST Success

+-- Restore password file for database May 27, 2024 5:53:39 PM CEST May 27, 2024 5:53:39 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 5:53:39 PM CEST May 27, 2024 5:53:54 PM CEST Success

+-- Persist metadata for database May 27, 2024 5:53:54 PM CEST May 27, 2024 5:53:54 PM CEST Success

+-- Create adrci directory May 27, 2024 5:53:54 PM CEST May 27, 2024 5:53:54 PM CEST Success

+-- Run SqlPatch May 27, 2024 5:53:54 PM CEST May 27, 2024 5:54:03 PM CEST Success

Restore database: LV01DEV May 27, 2024 5:54:03 PM CEST May 27, 2024 5:55:15 PM CEST Success

+-- Adding database to GI May 27, 2024 5:54:03 PM CEST May 27, 2024 5:54:05 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:54:05 PM CEST May 27, 2024 5:54:05 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:54:05 PM CEST May 27, 2024 5:54:40 PM CEST Success

+-- Restore password file for database May 27, 2024 5:54:40 PM CEST May 27, 2024 5:54:40 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 5:54:40 PM CEST May 27, 2024 5:54:55 PM CEST Success

+-- Persist metadata for database May 27, 2024 5:54:55 PM CEST May 27, 2024 5:54:55 PM CEST Success

+-- Create adrci directory May 27, 2024 5:54:55 PM CEST May 27, 2024 5:54:56 PM CEST Success

+-- Run SqlPatch May 27, 2024 5:54:56 PM CEST May 27, 2024 5:55:15 PM CEST Success

Restore database: LV02DEV May 27, 2024 5:55:15 PM CEST May 27, 2024 5:56:29 PM CEST Success

+-- Adding database to GI May 27, 2024 5:55:15 PM CEST May 27, 2024 5:55:17 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:55:17 PM CEST May 27, 2024 5:55:17 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:55:17 PM CEST May 27, 2024 5:55:51 PM CEST Success

+-- Restore password file for database May 27, 2024 5:55:51 PM CEST May 27, 2024 5:55:51 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 5:55:51 PM CEST May 27, 2024 5:56:07 PM CEST Success

+-- Persist metadata for database May 27, 2024 5:56:07 PM CEST May 27, 2024 5:56:07 PM CEST Success

+-- Create adrci directory May 27, 2024 5:56:07 PM CEST May 27, 2024 5:56:07 PM CEST Success

+-- Run SqlPatch May 27, 2024 5:56:07 PM CEST May 27, 2024 5:56:29 PM CEST Success

Restore database: MCHTST May 27, 2024 5:56:29 PM CEST May 27, 2024 5:57:30 PM CEST Success

+-- Adding database to GI May 27, 2024 5:56:29 PM CEST May 27, 2024 5:56:30 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:56:30 PM CEST May 27, 2024 5:56:30 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:56:30 PM CEST May 27, 2024 5:57:05 PM CEST Success

+-- Restore password file for database May 27, 2024 5:57:05 PM CEST May 27, 2024 5:57:05 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 5:57:05 PM CEST May 27, 2024 5:57:20 PM CEST Success

+-- Persist metadata for database May 27, 2024 5:57:20 PM CEST May 27, 2024 5:57:20 PM CEST Success

+-- Create adrci directory May 27, 2024 5:57:20 PM CEST May 27, 2024 5:57:21 PM CEST Success

+-- Run SqlPatch May 27, 2024 5:57:21 PM CEST May 27, 2024 5:57:30 PM CEST Success

Restore database: LV02TST May 27, 2024 5:57:30 PM CEST May 27, 2024 5:58:30 PM CEST Success

+-- Adding database to GI May 27, 2024 5:57:30 PM CEST May 27, 2024 5:57:32 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:57:32 PM CEST May 27, 2024 5:57:32 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:57:32 PM CEST May 27, 2024 5:58:06 PM CEST Success

+-- Restore password file for database May 27, 2024 5:58:06 PM CEST May 27, 2024 5:58:06 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 5:58:06 PM CEST May 27, 2024 5:58:22 PM CEST Success

+-- Persist metadata for database May 27, 2024 5:58:22 PM CEST May 27, 2024 5:58:22 PM CEST Success

+-- Create adrci directory May 27, 2024 5:58:22 PM CEST May 27, 2024 5:58:22 PM CEST Success

+-- Run SqlPatch May 27, 2024 5:58:22 PM CEST May 27, 2024 5:58:30 PM CEST Success

Restore database: MCHBAC May 27, 2024 5:58:30 PM CEST May 27, 2024 5:59:41 PM CEST Success

+-- Adding database to GI May 27, 2024 5:58:30 PM CEST May 27, 2024 5:58:32 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:58:32 PM CEST May 27, 2024 5:58:32 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:58:32 PM CEST May 27, 2024 5:59:07 PM CEST Success

+-- Restore password file for database May 27, 2024 5:59:07 PM CEST May 27, 2024 5:59:07 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 5:59:07 PM CEST May 27, 2024 5:59:23 PM CEST Success

+-- Persist metadata for database May 27, 2024 5:59:23 PM CEST May 27, 2024 5:59:23 PM CEST Success

+-- Create adrci directory May 27, 2024 5:59:24 PM CEST May 27, 2024 5:59:24 PM CEST Success

+-- Run SqlPatch May 27, 2024 5:59:24 PM CEST May 27, 2024 5:59:41 PM CEST Success

Restore database: BRRTST May 27, 2024 5:59:41 PM CEST May 27, 2024 6:01:24 PM CEST Success

+-- Adding database to GI May 27, 2024 5:59:41 PM CEST May 27, 2024 5:59:42 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 5:59:42 PM CEST May 27, 2024 5:59:42 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 5:59:42 PM CEST May 27, 2024 6:00:16 PM CEST Success

+-- Restore password file for database May 27, 2024 6:00:16 PM CEST May 27, 2024 6:00:16 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 6:00:16 PM CEST May 27, 2024 6:00:38 PM CEST Success

+-- Persist metadata for database May 27, 2024 6:00:38 PM CEST May 27, 2024 6:00:38 PM CEST Success

+-- Create adrci directory May 27, 2024 6:00:38 PM CEST May 27, 2024 6:00:39 PM CEST Success

+-- Run SqlPatch May 27, 2024 6:00:39 PM CEST May 27, 2024 6:01:24 PM CEST Success

Restore database: LV01TST May 27, 2024 6:01:24 PM CEST May 27, 2024 6:02:40 PM CEST Success

+-- Adding database to GI May 27, 2024 6:01:24 PM CEST May 27, 2024 6:01:26 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 6:01:26 PM CEST May 27, 2024 6:01:26 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 6:01:26 PM CEST May 27, 2024 6:02:01 PM CEST Success

+-- Restore password file for database May 27, 2024 6:02:01 PM CEST May 27, 2024 6:02:01 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 6:02:01 PM CEST May 27, 2024 6:02:18 PM CEST Success

+-- Persist metadata for database May 27, 2024 6:02:18 PM CEST May 27, 2024 6:02:18 PM CEST Success

+-- Create adrci directory May 27, 2024 6:02:18 PM CEST May 27, 2024 6:02:18 PM CEST Success

+-- Run SqlPatch May 27, 2024 6:02:18 PM CEST May 27, 2024 6:02:40 PM CEST Success

Restore database: DBTEST May 27, 2024 6:02:40 PM CEST May 27, 2024 6:03:59 PM CEST Success

+-- Adding database to GI May 27, 2024 6:02:40 PM CEST May 27, 2024 6:02:42 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 6:02:42 PM CEST May 27, 2024 6:02:42 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 6:02:42 PM CEST May 27, 2024 6:03:17 PM CEST Success

+-- Restore password file for database May 27, 2024 6:03:17 PM CEST May 27, 2024 6:03:17 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 6:03:17 PM CEST May 27, 2024 6:03:34 PM CEST Success

+-- Persist metadata for database May 27, 2024 6:03:34 PM CEST May 27, 2024 6:03:34 PM CEST Success

+-- Create adrci directory May 27, 2024 6:03:35 PM CEST May 27, 2024 6:03:35 PM CEST Success

+-- Run SqlPatch May 27, 2024 6:03:35 PM CEST May 27, 2024 6:03:59 PM CEST Success

Restore database: KGED19TS May 27, 2024 6:03:59 PM CEST May 27, 2024 6:06:32 PM CEST Success

+-- Adding database to GI May 27, 2024 6:03:59 PM CEST May 27, 2024 6:04:02 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 6:04:02 PM CEST May 27, 2024 6:04:02 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 6:04:02 PM CEST May 27, 2024 6:04:37 PM CEST Success

+-- Restore password file for database May 27, 2024 6:04:37 PM CEST May 27, 2024 6:04:38 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 6:04:38 PM CEST May 27, 2024 6:04:57 PM CEST Success

+-- Persist metadata for database May 27, 2024 6:04:58 PM CEST May 27, 2024 6:04:58 PM CEST Success

+-- Create adrci directory May 27, 2024 6:04:58 PM CEST May 27, 2024 6:04:58 PM CEST Success

+-- Run SqlPatch May 27, 2024 6:04:58 PM CEST May 27, 2024 6:06:32 PM CEST Success

Restore database: BRFTST May 27, 2024 6:06:32 PM CEST May 27, 2024 6:08:28 PM CEST Success

+-- Adding database to GI May 27, 2024 6:06:32 PM CEST May 27, 2024 6:06:34 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 6:06:34 PM CEST May 27, 2024 6:06:34 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 6:06:35 PM CEST May 27, 2024 6:07:09 PM CEST Success

+-- Restore password file for database May 27, 2024 6:07:09 PM CEST May 27, 2024 6:07:09 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 6:07:09 PM CEST May 27, 2024 6:07:33 PM CEST Success

+-- Persist metadata for database May 27, 2024 6:07:33 PM CEST May 27, 2024 6:07:33 PM CEST Success

+-- Create adrci directory May 27, 2024 6:07:33 PM CEST May 27, 2024 6:07:33 PM CEST Success

+-- Run SqlPatch May 27, 2024 6:07:33 PM CEST May 27, 2024 6:08:28 PM CEST Success

Restore database: BRSLTST May 27, 2024 6:08:28 PM CEST May 27, 2024 6:10:16 PM CEST Success

+-- Adding database to GI May 27, 2024 6:08:28 PM CEST May 27, 2024 6:08:30 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 6:08:31 PM CEST May 27, 2024 6:08:31 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 6:08:31 PM CEST May 27, 2024 6:09:05 PM CEST Success

+-- Restore password file for database May 27, 2024 6:09:05 PM CEST May 27, 2024 6:09:05 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 6:09:05 PM CEST May 27, 2024 6:09:27 PM CEST Success

+-- Persist metadata for database May 27, 2024 6:09:27 PM CEST May 27, 2024 6:09:27 PM CEST Success

+-- Create adrci directory May 27, 2024 6:09:27 PM CEST May 27, 2024 6:09:27 PM CEST Success

+-- Run SqlPatch May 27, 2024 6:09:27 PM CEST May 27, 2024 6:10:16 PM CEST Success

Restore database: BRCLATST May 27, 2024 6:10:16 PM CEST May 27, 2024 6:12:13 PM CEST Success

+-- Adding database to GI May 27, 2024 6:10:17 PM CEST May 27, 2024 6:10:18 PM CEST Success

+-- Adding database instance(s) to GI May 27, 2024 6:10:18 PM CEST May 27, 2024 6:10:18 PM CEST Success

+-- Modifying SPFILE for database May 27, 2024 6:10:19 PM CEST May 27, 2024 6:10:53 PM CEST Success

+-- Restore password file for database May 27, 2024 6:10:54 PM CEST May 27, 2024 6:10:54 PM CEST Skipped

+-- Start instance(s) for database May 27, 2024 6:10:54 PM CEST May 27, 2024 6:11:16 PM CEST Success

+-- Persist metadata for database May 27, 2024 6:11:16 PM CEST May 27, 2024 6:11:16 PM CEST Success

+-- Create adrci directory May 27, 2024 6:11:16 PM CEST May 27, 2024 6:11:16 PM CEST Success

+-- Run SqlPatch May 27, 2024 6:11:16 PM CEST May 27, 2024 6:12:13 PM CEST Success

Restore Object Stores May 27, 2024 6:12:13 PM CEST May 27, 2024 6:12:13 PM CEST Success

Remount NFS backups May 27, 2024 6:12:13 PM CEST May 27, 2024 6:12:13 PM CEST Success

Restore BackupConfigs May 27, 2024 6:12:13 PM CEST May 27, 2024 6:12:13 PM CEST Success

Reattach backupconfigs to DBs May 27, 2024 6:12:13 PM CEST May 27, 2024 6:12:13 PM CEST Success

Restore backup reports May 27, 2024 6:12:13 PM CEST May 27, 2024 6:12:13 PM CEST Success

Our 12cR1 databases were restored without any problems, as were the 19c ones.
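With this many databases, the job report is long, and a single failed task is easy to miss. A sketch to spot such tasks, assuming the task table layout shown above where the status is the last field of each task line (Success or Skipped when everything went well). `check_job_tasks` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "odacli describe-job" output on stdin and report
# any task whose status (last field of the line) is neither Success nor Skipped.
# Returns 1 if such a task is found, 0 otherwise.
check_job_tasks() {
  awk '
    # Task lines start after the "Task Name ... Status" header.
    $1 == "Task" && $2 == "Name" { in_tasks = 1; next }
    !in_tasks || NF == 0 || /^-/ { next }
    {
      total++
      if ($NF != "Success" && $NF != "Skipped") {
        print "NOT OK: " $0
        bad++
      }
    }
    END {
      printf "%d task line(s) checked, %d not OK\n", total + 0, bad + 0
      exit (bad > 0)
    }
  '
}

# Usage: odacli describe-job -i <job id> | check_job_tasks
```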

Next steps when using DPR

Your 12.1 databases will never get another update, but this does not apply to 19c databases. DPR will not update your 19.18 databases to 19.22: you need to use the classic patching method for that. Download the 19.22 DB clone, register it in the ODA repository and run the prepatch report on your first 19c DB home. Then patch the DB home to 19.22:

odacli update-repository -f /backup/patch/19_22/DB/odacli-dcs-19.22.0.0.0-240306-DB-19.22.0.0.zip

...

odacli create-prepatchreport -d -i 84cb4f9a-7c45-432b-9e4a-c58f2fe53ca0 -v 19.22.0.0.0

...

odacli describe-prepatchreport -i a9811a90-0f62-4648-86e5-c9a5adaf1be5

...

odacli update-dbhome -i 84cb4f9a-7c45-432b-9e4a-c58f2fe53ca0 -v 19.22.0.0.0 -f

...

As all databases from this 19.18 DB home are now linked to the new 19.22 DB home, the old DB home is no longer needed; let’s remove it:

odacli delete-dbhome -i 84cb4f9a-7c45-432b-9e4a-c58f2fe53ca0

odacli describe-job -i 814d1792-95a6-4592-90b5-42d0b271917b

...

odacli list-dbhomes

ID Name DB Version DB Edition Home Location Status

---------------------------------------- -------------------- -------------------- ---------- -------------------------------------------------------- ----------

da2a5bef-9e79-49e2-b811-b7a8b9283bd9 OraDB12102_home3 12.1.0.2.220719 EE /u01/app/odaorahome/oracle/product/12.1.0.2/dbhome_3 CONFIGURED

f221039f-1430-488e-b853-e3a3fc518020 OraDB19000_home3 19.22.0.0.240116 EE /u01/app/odaorahome/oracle/product/19.0.0.0/dbhome_3 CONFIGURED

If you have multiple 19c DB homes, you will need to repeat this update for each of them.
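With several 19c DB homes, a small helper can pick out the ones still waiting for the update from the odacli list-dbhomes output. This is a minimal Python sketch, assuming the whitespace-separated column layout shown above; the function names are hypothetical and not part of odacli. It only builds the odacli update-dbhome calls with the flags used earlier, so you would still run the prepatch report for each home first.

```python
import re

# Data rows of "odacli list-dbhomes" start with a UUID; header and separator lines do not.
UUID = re.compile(r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}")


def homes_needing_patch(listing: str, target: str = "19.22") -> list[str]:
    """Return the IDs of 19c DB homes whose version is not yet `target`.

    Assumes the columns shown in the post:
    ID, Name, DB Version, DB Edition, Home Location, Status.
    """
    ids = []
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) < 6 or not UUID.fullmatch(fields[0]):
            continue
        home_id, version = fields[0], fields[2]
        # Only 19c homes still get updates; 12.1 homes are skipped.
        if version.startswith("19.") and not version.startswith(target + "."):
            ids.append(home_id)
    return ids


def update_commands(ids: list[str], version: str = "19.22.0.0.0") -> list[list[str]]:
    """Build one "odacli update-dbhome" call per DB home, using the flags shown above."""
    return [["odacli", "update-dbhome", "-i", home_id, "-v", version, "-f"] for home_id in ids]
```

You could feed it the output of subprocess.run(["odacli", "list-dbhomes"], capture_output=True, text=True).stdout. Review the generated commands before running them.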

If your ODA is also running VMs and/or DB Systems, you will need to run a restore-node -kvm, followed by a restore-node -dbs.

Conclusion

Keeping 12cR1 databases is still possible when upgrading to 19.21 or later. Be aware, however, that this only works because Oracle has no reason to remove your existing DB homes and databases. For a fresh setup without DPR, I would recommend putting old DB homes and databases inside a VM. Unlike DB Systems, VM content is not managed by odacli, so you can use whatever OS and Oracle release you need. Of course, this comes without any support whatsoever.

The article Is DPR compatible with 12.1 databases running on your ODA? first appeared on dbi Blog.

CloudNativePG – Scaling up and down

By now, if you have followed the previous posts (here, here, here, here and here), we know quite a bit about how to use CloudNativePG to deploy a PostgreSQL cluster and how to get detailed information about the deployment. In this post, we'll look at how you can use this deployment to scale the cluster up and down. This can be important if your workload changes throughout the day or the week and your application is able to distribute read-only workloads across the PostgreSQL replicas.

Looking at the current state of the cluster, we see this:

minicube@micro-minicube:~> kubectl-cnpg status my-pg-cluster

Cluster Summary

Name: my-pg-cluster

Namespace: default

System ID: 7378131726640287762

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:16.2

Primary instance: my-pg-cluster-1

Primary start time: 2024-06-08 13:59:26 +0000 UTC (uptime 88h35m7s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/26000000 (Timeline: 1 - WAL File: 000000010000000000000012)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

my-pg-cluster-ca 2024-09-06 13:54:17 +0000 UTC 86.31

my-pg-cluster-replication 2024-09-06 13:54:17 +0000 UTC 86.31

my-pg-cluster-server 2024-09-06 13:54:17 +0000 UTC 86.31

Continuous Backup status

Not configured

Physical backups

No running physical backups found

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

my-pg-cluster-2 0/26000000 0/26000000 0/26000000 0/26000000 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-3 0/26000000 0/26000000 0/26000000 0/26000000 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Managed roles status

No roles managed

Tablespaces status

No managed tablespaces

Pod Disruption Budgets status

Name Role Expected Pods Current Healthy Minimum Desired Healthy Disruptions Allowed

---- ---- ------------- --------------- ----------------------- -------------------

my-pg-cluster replica 2 2 1 1

my-pg-cluster-primary primary 1 1 1 0

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

my-pg-cluster-1 37 MB 0/26000000 Primary OK BestEffort 1.23.1 minikube

my-pg-cluster-2 37 MB 0/26000000 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-3 37 MB 0/26000000 Standby (async) OK BestEffort 1.23.1 minikube

We have a primary instance running in pod my-pg-cluster-1, and two replicas in asynchronous mode running in pods my-pg-cluster-2 and my-pg-cluster-3. Let's assume the workload is increasing and we want two more replicas. There are two ways to do this. The first one is to change the cluster configuration in the YAML file and then re-apply it. This is the configuration as it is now:

apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: my-pg-cluster
spec:
  instances: 3
  bootstrap:
    initdb:
      database: db1
      owner: db1
      dataChecksums: true
      walSegmentSize: 32
      localeCollate: 'en_US.utf8'
      localeCType: 'en_US.utf8'
      postInitSQL:
        - create user db2
        - create database db2 with owner = db2
  postgresql:
    parameters:
      work_mem: "12MB"
      pg_stat_statements.max: "2500"
    pg_hba:
      - host all all 192.168.122.0/24 scram-sha-256
  storage:
    size: 1Gi

All we need to do is change the number of instances. The current value of three gives us one primary and two replicas. To get two more replicas, change it to five and re-apply the configuration:

minicube@micro-minicube:~> grep instances pg.yaml

instances: 5

minicube@micro-minicube:~> kubectl apply -f pg.yaml

cluster.postgresql.cnpg.io/my-pg-cluster configured
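If you script this change, one minimal approach is to edit the instances line in pg.yaml and then run kubectl apply -f pg.yaml as above. The Python sketch below assumes the manifest contains a single instances: key, as in the definition shown earlier. It does a text substitution rather than parsing YAML, so the rest of the file is left untouched. The function names are hypothetical.

```python
import re
from pathlib import Path

# Matches the "instances:" line of the manifest and captures its indentation.
INSTANCES = re.compile(r"^([ \t]*instances:[ \t]*)\d+[ \t]*$", re.MULTILINE)


def set_instances(manifest: str, count: int) -> str:
    """Return the manifest text with the first "instances:" value set to `count`.

    Plain-text edit, not a YAML parser: assumes a single "instances:" key,
    as in the Cluster definition above.
    """
    if count < 1:
        raise ValueError("a cluster needs at least one instance (the primary)")
    new_text, replaced = INSTANCES.subn(rf"\g<1>{count}", manifest, count=1)
    if replaced == 0:
        raise ValueError("no 'instances:' line found")
    return new_text


def update_manifest(path: str, count: int) -> None:
    """Rewrite the manifest file in place; apply it afterwards with kubectl apply -f."""
    file = Path(path)
    file.write_text(set_instances(file.read_text(), count))
```

For example, update_manifest("pg.yaml", 5) turns instances: 3 into instances: 5, keeping the original indentation, before you re-apply the file.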

By monitoring the pods, you can follow how the two new pods are brought up and how the new replicas are attached to the existing cluster:

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (32m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (32m ago) 2d

my-pg-cluster-3 1/1 Running 1 (32m ago) 2d

my-pg-cluster-4 0/1 PodInitializing 0 3s

my-pg-cluster-4-join-kqgwp 0/1 Completed 0 11s

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (33m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (33m ago) 2d

my-pg-cluster-3 1/1 Running 1 (33m ago) 2d

my-pg-cluster-4 1/1 Running 0 42s

my-pg-cluster-5 1/1 Running 0 19s
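Instead of re-running kubectl get pods by hand, you could check the listing programmatically. This Python sketch is not part of CloudNativePG and its function name is hypothetical. It assumes the default kubectl get pods columns. It counts the instance pods named my-pg-cluster-<n> whose READY column is complete, and it skips helper pods such as my-pg-cluster-4-join-kqgwp.

```python
import re


def ready_instances(pods_output: str, cluster: str = "my-pg-cluster") -> tuple[int, int]:
    """Count (ready, total) instance pods of `cluster` in "kubectl get pods" output.

    Instance pods are named <cluster>-<n>; join pods like <cluster>-4-join-kqgwp
    do not match and are ignored. READY is the second column, e.g. "1/1".
    """
    instance_pod = re.compile(rf"{re.escape(cluster)}-\d+")
    ready = total = 0
    for line in pods_output.splitlines():
        fields = line.split()
        if len(fields) < 2 or not instance_pod.fullmatch(fields[0]):
            continue
        total += 1
        running, expected = fields[1].split("/")
        if running == expected:
            ready += 1
    return ready, total
```

Applied to the first listing above, it reports 3 ready pods out of 4. Applied to the second, it reports 5 out of 5.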

Now we see five pods, as requested, and looking at the PostgreSQL streaming replication configuration confirms that we now have four replicas:

minicube@micro-minicube:~> kubectl-cnpg status my-pg-cluster

Cluster Summary

Name: my-pg-cluster

Namespace: default

System ID: 7378131726640287762

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:16.2

Primary instance: my-pg-cluster-1

Primary start time: 2024-06-08 13:59:26 +0000 UTC (uptime 88h43m54s)

Status: Cluster in healthy state

Instances: 5

Ready instances: 5

Current Write LSN: 0/2C000060 (Timeline: 1 - WAL File: 000000010000000000000016)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

my-pg-cluster-ca 2024-09-06 13:54:17 +0000 UTC 86.30

my-pg-cluster-replication 2024-09-06 13:54:17 +0000 UTC 86.30

my-pg-cluster-server 2024-09-06 13:54:17 +0000 UTC 86.30

Continuous Backup status

Not configured

Physical backups

No running physical backups found

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

my-pg-cluster-2 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-3 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-4 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-5 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Managed roles status

No roles managed

Tablespaces status

No managed tablespaces

Pod Disruption Budgets status

Name Role Expected Pods Current Healthy Minimum Desired Healthy Disruptions Allowed

---- ---- ------------- --------------- ----------------------- -------------------

my-pg-cluster replica 4 4 3 1

my-pg-cluster-primary primary 1 1 1 0

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

my-pg-cluster-1 37 MB 0/2C000060 Primary OK BestEffort 1.23.1 minikube

my-pg-cluster-2 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-3 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-4 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-5 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

If you want to scale this down again (maybe because the workload decreased), you can do that in the same way by reducing the number of instances from five to three in the cluster definition, or by directly scaling the cluster down with kubectl:

minicube@micro-minicube:~> kubectl scale --replicas=2 -f pg.yaml

cluster.postgresql.cnpg.io/my-pg-cluster scaled

Attention: “replicas” in this context does not mean streaming replication replicas. It means replicas in the Kubernetes sense, which for this cluster is the total number of instances, the primary included. So if you do it like above, the result is one primary and one replica:

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (39m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (39m ago) 2d1h
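The arithmetic behind this pitfall is: --replicas = standby replicas + 1. As a small illustration, this Python helper builds the kubectl scale call for a desired number of streaming replicas, using the same -f pg.yaml form as above. The function name is hypothetical.

```python
def scale_command(standbys: int, manifest: str = "pg.yaml") -> list[str]:
    """Build a "kubectl scale" call that leaves `standbys` streaming replicas.

    --replicas counts all instances of the cluster, so the primary is added on top.
    """
    if standbys < 0:
        raise ValueError("the number of standbys cannot be negative")
    return ["kubectl", "scale", f"--replicas={standbys + 1}", "-f", manifest]
```

For example, scale_command(2) produces the --replicas=3 form, which restores one primary and two replicas.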

What you probably really want is this (to get back to the initial state of the cluster):

minicube@micro-minicube:~> kubectl scale --replicas=3 -f pg.yaml

cluster.postgresql.cnpg.io/my-pg-cluster scaled

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-6-join-747nx 0/1 Pending 0 1s

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-6-join-747nx 1/1 Running 0 5s

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-6 0/1 Running 0 5s

my-pg-cluster-6-join-747nx 0/1 Completed 0 14s

...

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-6 1/1 Running 0 16s

What you should not do is mix both ways of scaling, for one reason: scaling up or down with “kubectl scale” does not modify your cluster configuration file. The file still specifies five instances:

minicube@micro-minicube:~> grep instances pg.yaml

instances: 5

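Our recommendation is to scale only by modifying the configuration file and re-applying it. This ensures that the configuration file always reflects “reality”, rather than a mix of live state and desired state. Otherwise, the next “kubectl apply -f pg.yaml” brings the cluster back to whatever instance count is still in the file, five in our case.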
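To catch a drift like the one above, where the file specifies five instances while the cluster runs three, a small check can compare both values. This Python sketch assumes the pg.yaml layout from this post and a kubectl that can reach the cluster. It reads spec.instances of the live Cluster resource (clusters.postgresql.cnpg.io) via a jsonpath output. The function names are hypothetical.

```python
import re
import subprocess

# Captures the number on the "instances:" line of the manifest.
INSTANCES = re.compile(r"^[ \t]*instances:[ \t]*(\d+)[ \t]*$", re.MULTILINE)


def manifest_instances(manifest: str) -> int:
    """Read the desired number of instances from the manifest text."""
    match = INSTANCES.search(manifest)
    if match is None:
        raise ValueError("no 'instances:' line found")
    return int(match.group(1))


def live_instances(cluster: str = "my-pg-cluster") -> int:
    """Ask the API server for spec.instances of the running Cluster resource."""
    result = subprocess.run(
        ["kubectl", "get", "clusters.postgresql.cnpg.io", cluster,
         "-o", "jsonpath={.spec.instances}"],
        capture_output=True, text=True, check=True,
    )
    return int(result.stdout.strip())


def has_drift(manifest: str, live: int) -> bool:
    """True if the file and the live cluster disagree, e.g. after "kubectl scale"."""
    return manifest_instances(manifest) != live
```

For example, has_drift(Path("pg.yaml").read_text(), live_instances()) returning True (with pathlib.Path imported) means the next kubectl apply -f pg.yaml would change the number of instances.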

In the next post, we'll look into storage, because you want your databases to be persistent and fast.

The article CloudNativePG – Scaling up and down first appeared on dbi Blog.

CloudNativePG – The kubectl plugin